Why Edge Computing?

AI on MCUs enables cheaper, lower-power and smaller edge devices. It reduces latency, conserves bandwidth, improves privacy and enables smarter applications.

Software engineering can be fun, especially when working toward a common goal with like-minded people. Ever since we started the uTensor project, an artificial intelligence framework for microcontrollers (MCUs), many have asked us: why bother with edge computing on MCUs? Aren’t the cloud and application processors enough for building IoT systems? Thoughtful questions, indeed. Here I will try to explain our motivation for the project; hopefully, you will find it interesting too.

This blog post is also published on Medium.

What are MCUs?

MCUs are very low-cost, tiny computational devices. They are often found at the heart of IoT edge devices. With 15 billion MCUs shipped a year, these chips are everywhere. Their low energy consumption means they can run for months on coin-cell batteries and require no heatsinks. Their simplicity helps reduce the overall cost of the system.

An image of Mbed development boards

Unutilized power

The computational power of MCUs has been increasing over the past decades. However, in most IoT applications, they do little more than shuttle data from sensors to the cloud. MCUs are typically clocked at tens to hundreds of MHz and packaged with tens to hundreds of KB of RAM. At these clock speeds and RAM capacities, forwarding data is a cakewalk; in fact, the MCUs are idle most of the time. Let’s illustrate this below:

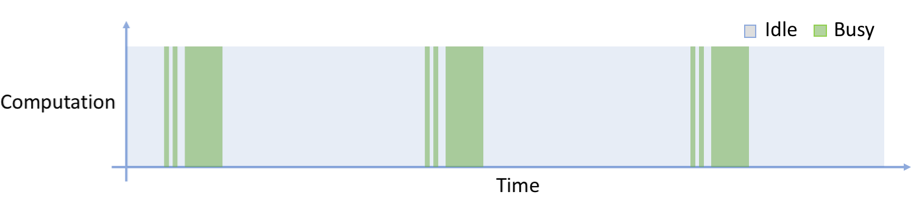

A representation of MCU’s busy vs idle time for typical IoT applications

The area of the graph above shows the computational budget of the MCU. Green areas indicate when the MCU is busy, which can include:

- Networking

- Sensor reading

- Updating displays

- Timers and other interrupts

The blue areas represent the idle, untapped potential. Imagine millions of such devices deployed in the real world: collectively, that is a lot of unutilized computational power.
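
To put the idle time into numbers, here is a back-of-the-envelope sketch in Python. The clock rate, busy time and wake-up period are hypothetical values chosen for illustration, not measurements from a real device; substitute your own figures.

```python
def idle_budget(clock_hz: float, busy_s: float, period_s: float) -> tuple[float, float]:
    """Return (idle fraction, idle CPU cycles) for a workload that repeats every period_s seconds."""
    if not 0.0 <= busy_s <= period_s:
        raise ValueError("busy time must fit inside the period")
    idle_fraction = 1.0 - busy_s / period_s
    idle_cycles = idle_fraction * period_s * clock_hz
    return idle_fraction, idle_cycles


# Hypothetical device: a 100 MHz core that wakes once per second and spends
# 20 ms reading a sensor, updating a display and sending one packet.
fraction, cycles = idle_budget(clock_hz=100e6, busy_s=0.020, period_s=1.0)
print(f"idle {fraction:.0%} of the time, about {cycles / 1e6:.0f} million spare cycles per second")
```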

Adding AI to edge devices

What if we could tap into this power? Can we do more on the edge? It turns out that AI fits perfectly here. Let’s look at some ways we can apply AI on the edge:

Inferencing

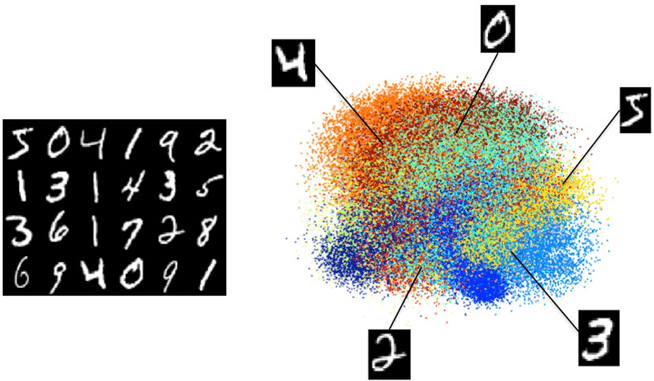

Simple image classification, acoustic detection and motion analysis can be done on the edge device itself. Because only the final result is transmitted, we can minimize delay, improve privacy and conserve bandwidth in IoT systems.

Projection into a 3D space (via PCA) of the MNIST benchmark dataset. source
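
As a rough illustration of the bandwidth saving, the Python sketch below compares sending a raw 28x28 8-bit image (the size of an MNIST digit) with sending only an on-device classification result. The nearest-centroid `classify` function and the two made-up centroids are hypothetical stand-ins for a real trained model.

```python
import struct

WIDTH, HEIGHT = 28, 28  # hypothetical frame size, matching an MNIST digit


def classify(pixels: bytes, centroids: dict[int, bytes]) -> int:
    """Stand-in model: return the label whose centroid is closest (squared L2) to the frame."""
    def dist(a: bytes, b: bytes) -> int:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: dist(pixels, centroids[label]))


def raw_payload(pixels: bytes) -> bytes:
    """Cloud-side inference: the device ships every pixel."""
    return pixels


def edge_payload(pixels: bytes, centroids: dict[int, bytes]) -> bytes:
    """Edge inference: the device ships only the predicted label as one unsigned byte."""
    return struct.pack("B", classify(pixels, centroids))


# Two made-up centroids (all-dark and all-bright) and one fairly bright frame.
centroids = {0: bytes(WIDTH * HEIGHT), 1: bytes([255]) * (WIDTH * HEIGHT)}
frame = bytes([200]) * (WIDTH * HEIGHT)

print(len(raw_payload(frame)), "bytes raw vs", len(edge_payload(frame, centroids)), "byte at the edge")
```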

Sensor Fusion

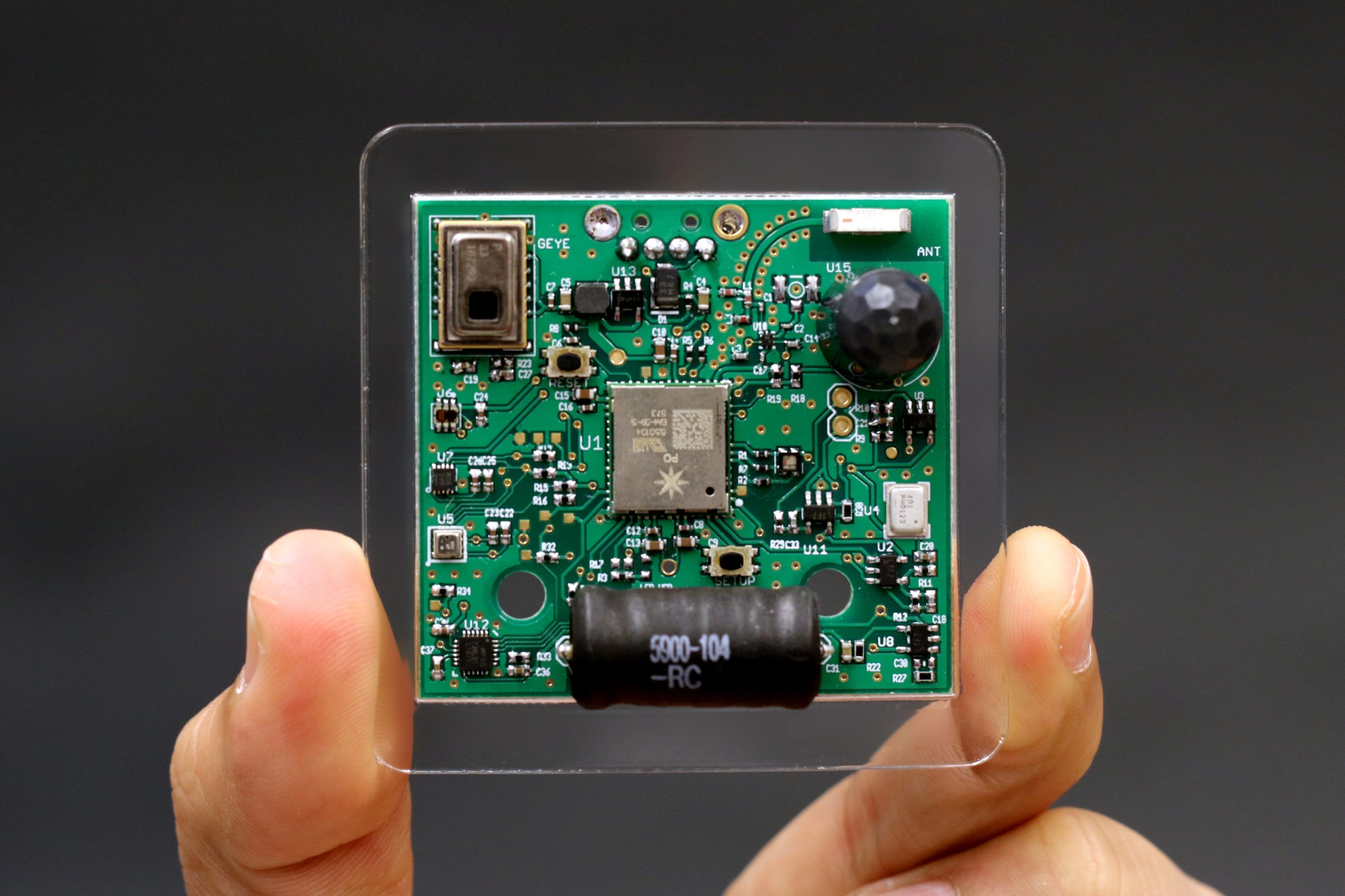

Different off-the-shelf sensors can be combined into a synthetic sensor. These types of sensors are capable of detecting complex events. A good example is the super sensor shown in the image above.

An image of the super sensor, including an accelerometer, microphone, magnetometer and more source
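
A minimal sketch of the idea, assuming each sensor delivers a window of samples: per-sensor features (here just RMS magnitude) are concatenated into one synthetic-sensor reading, and a rule fires only when several sensors agree. The `appliance_running` event and its thresholds are hypothetical, chosen for illustration; a real synthetic sensor would more likely learn this decision from labelled data.

```python
import math


def rms(window: list[float]) -> float:
    """Root-mean-square magnitude of one sensor window."""
    return math.sqrt(sum(s * s for s in window) / len(window))


def fuse(accel: list[float], mic: list[float], mag: list[float]) -> list[float]:
    """Combine per-sensor features into a single synthetic-sensor feature vector."""
    return [rms(accel), rms(mic), rms(mag)]


# Hypothetical thresholds for the (vibration, sound, magnetic field) features.
THRESHOLDS = (0.5, 0.3, 1.0)


def appliance_running(features: list[float]) -> bool:
    """Hypothetical event: fires only when every sensor exceeds its threshold,
    so a loud noise alone (microphone only) is not enough."""
    return all(f > t for f, t in zip(features, THRESHOLDS))


busy = fuse(accel=[0.8, -0.9, 0.7, -0.8], mic=[0.4, -0.5, 0.45, -0.4], mag=[1.2, 1.3, 1.25, 1.2])
quiet = fuse(accel=[0.1, -0.1, 0.1, -0.1], mic=[0.4, -0.5, 0.45, -0.4], mag=[1.2, 1.3, 1.25, 1.2])
print(appliance_running(busy), appliance_running(quiet))
```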

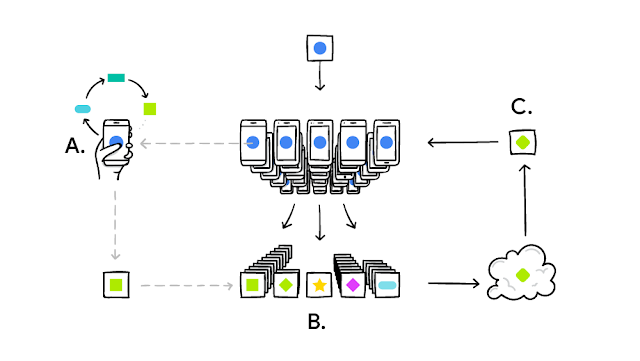

Self-improving products

Devices can make continuous improvements after they are deployed in the field. Google’s Gboard uses a technique called federated learning, which involves every device collecting data and making individual improvements. These individual improvements are aggregated by a central service, and every device is then updated with the combined result.

An illustration of federated learning source
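
The aggregation step can be sketched with federated averaging, the weighted-mean scheme commonly used in federated learning. This is a simplified illustration, not Gboard’s actual implementation: the model is a hypothetical two-parameter linear fit y = w0 + w1*x, each device runs one pass of SGD on data that never leaves it, and the server averages the resulting weights in proportion to each device’s sample count.

```python
def local_update(weights: list[float], data: list[tuple[float, float]], lr: float = 0.1) -> list[float]:
    """On-device step: one pass of SGD for y = w0 + w1*x over this device's private data."""
    w0, w1 = weights
    for x, y in data:
        err = (w0 + w1 * x) - y
        w0 -= lr * err
        w1 -= lr * err * x
    return [w0, w1]


def federated_average(updates: list[list[float]], sizes: list[int]) -> list[float]:
    """Server step: average device weights, weighted by how many samples each device used."""
    total = sum(sizes)
    return [sum(w[i] * n for w, n in zip(updates, sizes)) / total for i in range(len(updates[0]))]


def federated_round(global_w: list[float], device_data: list[list[tuple[float, float]]]) -> list[float]:
    """One round: every device improves the shared model locally, then the server merges."""
    updates = [local_update(global_w, data) for data in device_data]
    return federated_average(updates, [len(data) for data in device_data])


# Hypothetical private datasets on two devices, both drawn from y = 1 + 2x.
device_data = [
    [(-1.0, -1.0), (1.0, 3.0)],
    [(-0.5, 0.0), (0.5, 2.0)],
]
w = [0.0, 0.0]
for _ in range(100):
    w = federated_round(w, device_data)
print([round(v, 3) for v in w])
```

Only model weights cross the network in each round; the raw `(x, y)` samples stay on their devices.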

Bandwidth

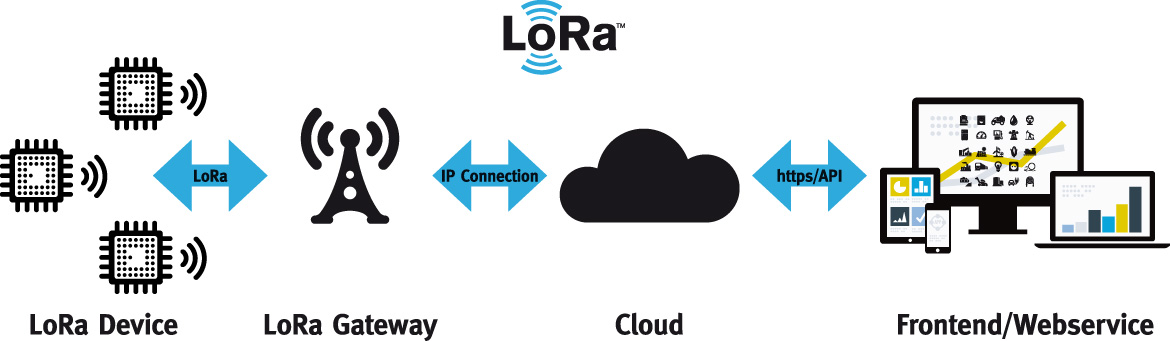

Neural networks can be partitioned so that some layers are evaluated on the device and the rest in the cloud. This makes it possible to balance workload and latency. The initial layers of a network can be viewed as feature-abstraction functions: as information propagates through the network, it is abstracted into high-level features. These high-level features take up much less room than the original data, which makes them much easier to transmit over the network. IoT communication technologies such as LoRa and NB-IoT have very limited payload sizes, and feature extraction helps pack the most relevant information into those limited payloads.

An illustration of the LoRa network setup for IoT source

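
The split can be sketched in Python as follows. Everything here is hypothetical: the two-layer network uses random placeholder weights instead of a trained model, and the 64-sample input, 4-feature cut point and 8-bit quantization step are illustrative choices. The point is the payload: the device sends four quantized features instead of 64 raw float32 samples.

```python
import random
import struct

# Hypothetical split network: layer 1 runs on the MCU, layer 2 runs in the cloud.
rng = random.Random(0)
N_IN, N_FEAT, N_OUT = 64, 4, 3  # raw samples, features transmitted, cloud outputs
W1 = [[rng.uniform(-0.1, 0.1) for _ in range(N_IN)] for _ in range(N_FEAT)]
B1 = [0.0] * N_FEAT
W2 = [[rng.uniform(-1.0, 1.0) for _ in range(N_FEAT)] for _ in range(N_OUT)]
B2 = [0.0] * N_OUT
SCALE = 0.05  # uint8 quantization step for the transmitted features


def dense(x: list[float], weights: list[list[float]], bias: list[float], relu: bool = True) -> list[float]:
    """Fully connected layer, optionally followed by ReLU."""
    out = [sum(xi * wi for xi, wi in zip(x, row)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in out] if relu else out


def device_side(samples: list[float]) -> bytes:
    """Run the first layer on the MCU and quantize its (non-negative) features to one byte each."""
    features = dense(samples, W1, B1)
    return bytes(min(255, round(f / SCALE)) for f in features)


def cloud_side(payload: bytes) -> list[float]:
    """Dequantize the received features and finish the forward pass in the cloud."""
    features = [q * SCALE for q in payload]
    return dense(features, W2, B2, relu=False)


samples = [rng.random() for _ in range(N_IN)]
raw = struct.pack(f"<{N_IN}f", *samples)  # what sending raw float32 samples would cost
payload = device_side(samples)
scores = cloud_side(payload)
print(len(raw), "bytes raw vs", len(payload), "bytes of features")
```

Moving the cut point deeper into the network generally shrinks the payload further but puts more computation on the MCU, which is the workload and latency trade-off described above.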

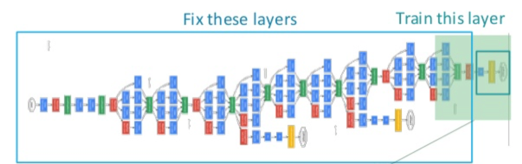

Transfer Learning

In the bandwidth example above, the neural network is distributed between the device and the cloud. In some cases, it is possible to repurpose the network for a completely different application just by changing the layers in the cloud, where the application logic is fairly easy to change. This hot-swapping of network layers enables the same devices to be used for different applications. The practice of modifying part of a network to perform a different task is an example of transfer learning.

A graphical representation of transfer learning source
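
One way to picture this in code: the device-side feature extractor stays frozen, and each application gets its own small head trained in the cloud on those same features. In this hypothetical Python sketch, `extract_features` (window mean and energy) stands in for the layers running on the MCU and a perceptron stands in for the cloud layers; both are illustrative substitutes, not uTensor code, and the labels are made up.

```python
def extract_features(window: list[float]) -> list[float]:
    """Frozen device-side layers (stand-in): window mean and mean energy."""
    n = len(window)
    return [sum(window) / n, sum(s * s for s in window) / n]


def predict(w: list[float], f: list[float]) -> int:
    """Linear head: the last weight is the bias."""
    return 1 if sum(wi * xi for wi, xi in zip(w, f + [1.0])) > 0 else 0


def train_head(features: list[list[float]], labels: list[int], epochs: int = 20, lr: float = 0.1) -> list[float]:
    """Cloud side: fit a new perceptron head; the device-side extractor is untouched."""
    w = [0.0] * (len(features[0]) + 1)
    for _ in range(epochs):
        for f, y in zip(features, labels):
            pred = predict(w, f)
            if pred != y:
                w = [wi + lr * (y - pred) * xi for wi, xi in zip(w, f + [1.0])]
    return w


# Hypothetical sensor windows; features are computed once, on the device.
windows = [[0.0, 0.0, 0.0, 0.0], [1.0, -1.0, 1.0, -1.0], [0.5, 0.5, 0.5, 0.5], [1.5, 0.5, 1.5, 0.5]]
features = [extract_features(win) for win in windows]

# Two applications = two cloud heads trained on the same device features.
heads = {
    "activity": train_head(features, [0, 1, 0, 1]),  # hypothetical labels: vibrating or not
    "offset": train_head(features, [0, 0, 1, 1]),    # hypothetical labels: drifted baseline or not
}
for name, head in heads.items():
    print(name, [predict(head, f) for f in features])
```

Only the small head changes between applications, so switching tasks does not require touching the deployed devices.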

Generative Models

Complementary to the bandwidth and transfer learning examples above, with careful engineering, an approximation of the original data can be reconstructed from the features extracted from it. This may allow edge devices to generate complex outputs with minimal input from the cloud, and the same idea has applications in data decompression.
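
A linear stand-in for this idea is sketched below in Python. Instead of a learned generative model or decoder, it uses a truncated orthonormal DCT-II basis (PCA works the same way but learns its basis from data): the sender keeps only the first k coefficients as its features, and the receiver rebuilds an approximation from them. The test signal and the values of k are hypothetical.

```python
import math


def dct_basis(n: int) -> list[list[float]]:
    """Orthonormal DCT-II basis; row k is the k-th basis vector of length n."""
    basis = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        basis.append([scale * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)])
    return basis


def encode(signal: list[float], basis: list[list[float]], k: int) -> list[float]:
    """Sender side: keep only the first k coefficients (the compact features)."""
    return [sum(s * b for s, b in zip(signal, basis[j])) for j in range(k)]


def decode(coeffs: list[float], basis: list[list[float]]) -> list[float]:
    """Receiver side: rebuild an approximation of the signal from the coefficients."""
    n = len(basis[0])
    return [sum(c * basis[j][i] for j, c in enumerate(coeffs)) for i in range(n)]


def rms_error(a: list[float], b: list[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


n = 32
signal = [math.sin(2 * math.pi * i / n) + 0.5 for i in range(n)]
basis = dct_basis(n)
for k in (4, 8, 32):
    approx = decode(encode(signal, basis, k), basis)
    print(k, "coefficients -> RMS error", round(rms_error(signal, approx), 4))
```

Because the basis is orthonormal, keeping more coefficients can never increase the reconstruction error, and keeping all n recovers the signal up to rounding.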

Wrapping up

AI could help edge devices become smarter, improve privacy and reduce bandwidth usage. However, at the time of writing, we know of no framework that deploys TensorFlow models on MCUs. We created uTensor hoping to catalyze edge computing’s development.

It may still take time before low-power, low-cost AI hardware is as common as MCUs. In addition, because deep learning algorithms are changing rapidly, it makes sense to have a flexible software framework that can keep up with AI and machine-learning research.

uTensor will continue to take advantage of the latest software and hardware advancements, for example CMSIS-NN, Arm’s Cortex-M machine learning APIs. These will be integrated into uTensor to ensure the best possible performance on Arm hardware. Developers and researchers will be able to easily test their latest ideas with uTensor, such as new algorithms, distributed computing or RTLs.

We hope this project brings anyone who is interested in the field together. After all, collaboration is the key to success at the cutting edge.
