Regarding interrupts, their use and blocking

Introduction

This article is generally written for beginners in the embedded world of microcontrollers. It may also benefit more experienced programmers who are coming from the safety net of writing code to run under an operating system like Windows, Mac OS X, Linux, FreeBSD or whatever.

It introduces the reader to the concept of "program context". A context is similar in many ways to a thread or a process, but much simpler. Even so, judging from the number of forum posts I see, it's often misunderstood.

So, let's move on step by step and get a good grasp of what's happening as we grow this example into a full-blown debacle of how not to do it (ending, of course, with examples of best practice to avoid these pitfalls).

I'd also like to say at this point that it may be a long article, but by the time you get to the end some of those evil printf()s will become saints again.

In the beginning

Let's start out with a new project in your compiler window. When you create a new project, the Mbed cloud compiler conveniently gives you a main.cpp that's already set and primed to trip you up and give you a bad day. Here's what it looks like:-

```cpp
#include "mbed.h"

DigitalOut myled(LED1);

int main() {
    while(1) {
        myled = 1;
        wait(0.2);
        myled = 0;
        wait(0.2);
    }
}
```

OK, so tripping you up and giving you a bad day is a bit harsh. What this main.cpp does do is give you a twofold hit. Having just opened your parcel and popped all the bubbles from the wrapper, there's nothing better than seeing your new toy perform. And because main.cpp is so simple, you get both halves: seeing it actually work, and understanding how it worked. It doesn't get much simpler.

And as analogies go, there's nothing worse than watching your children rip open their Christmas presents only to look up and see your spouse mouthing the words "Did you remember to buy the batteries?" If you did, fun is had by all; if you didn't, you know where the shed is.

Instant gratification is great, and main.cpp delivers just that. So, without further ado, let's make a small modification to main.cpp. Delete what you have and cut'n'paste the following into it:-

```cpp
#include "mbed.h"

DigitalOut led1(LED1);

int main() {
    while(1) {
        led1 = 1;
        wait(0.05);
        led1 = 0;
        wait(0.95);
    }
}
```

There are two changes here. The first is that we renamed myled to led1. I don't know about you, but all those LEDs are mine (or yours if you bought it!), so I just like to know which is which. The second change is how led1 is flashed. We switch it on, wait 0.05 seconds, switch it off, wait 0.95 seconds, then repeat, forever. The idea here is that each pass of while(1) { ... } takes around 1 second and led1 flashes briefly to mark the start of that second.

Go ahead and compile/run it just to make sure all is working well. What you should see is led1 flashing briefly once per second.

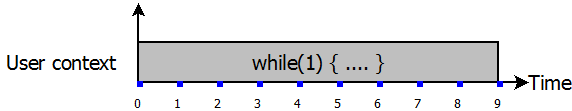

It's at this point I'm going to introduce a simple diagram that represents what's happening right now on your Mbed. Now, I know it's not a proper system context diagram, but nonetheless I'm going to call it a Context Diagram. Here it is:-

So, it's like a little graph. On the horizontal axis we have time, in seconds. The little blue boxes represent each time led1 flashes. I haven't given the vertical axis a name yet; more on that later. But the bar at the bottom represents a context. In this case it's "User Context", and that's code that executes inside your while(1) { ... } loop (including any functions and sub-functions that you call from that loop). Also, the diagram only shows the first 9 seconds. All the code I'm going to write is designed to mess things up within these 9 seconds. I'm going to deliberately exaggerate things to make sure of that!

OK, this is all about interrupts and making a mess of them, so let's add our first interrupt and, err, make a mess of it. Here's the code; delete your main.cpp contents and paste this in:-

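```cpp
#include "mbed.h"

Timeout to1;

DigitalOut led1(LED1);
DigitalOut led2(LED2);

// Timeout callback: this runs in interrupt context.
void cb1(void) {
    led2 = 1;
    wait(3);   // deliberately bad: blocks the CPU for 3 seconds inside the ISR
    led2 = 0;
}

int main() {
    to1.attach(&cb1, 2);   // call cb1() once, 2 seconds from now

    while(1) {
        led1 = 1;
        wait(0.05);
        led1 = 0;
        wait(0.95);
    }
}
```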

If you've just compiled and run that sample, you'll have noticed that 2 seconds after reset Timeout to1 triggered and the callback function cb1() was executed. This function switched on led2, waited 3 seconds, switched it off again and returned. That bit should be fairly obvious. But what's not so obvious is what happened to led1 while led2 was on. Go on, press reset again and watch led1. What happens?

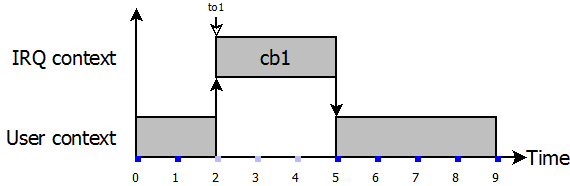

Yup, it doesn't flash! Now, let's return to our context diagram and "spell it out" with a picture:-

In the diagram above I have greyed out the "blue boxes" at t = 2, 3 and 4 to show that led1 didn't flash. As you can see, when timeout to1 triggered and cb1() was called, your program's context switched from User Context to Interrupt Context. Along with it, all of the CPU's execution time was spent handling the Interrupt Service Routine (ISR). As a result, your while(1) loop was suspended and didn't execute. That's why led1 stopped flashing.
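To see the blocking in numbers rather than pictures, here's a tiny host-side model in plain C++ (no Mbed required, and not how the Mbed library itself works). It assumes the main loop can only run when no ISR is executing, so any once-per-second flash that falls inside the ISR's busy window is simply lost. The function name missed_flashes() is made up for illustration.

```cpp
#include <cassert>
#include <vector>

// Hypothetical host-side model: an ISR starts at isr_start seconds and
// busy-waits for isr_len seconds. User Context (the while(1) loop) cannot
// run during that window, so any flash scheduled inside it never happens.
std::vector<int> missed_flashes(int isr_start, int isr_len, int horizon) {
    assert(isr_len >= 0 && horizon >= 0);
    std::vector<int> missed;
    for (int t = 0; t < horizon; ++t) {
        // The ISR owns the CPU for [isr_start, isr_start + isr_len).
        bool cpu_busy = (t >= isr_start) && (t < isr_start + isr_len);
        if (cpu_busy) {
            missed.push_back(t);
        }
    }
    return missed;
}
```

Calling missed_flashes(2, 3, 9) gives {2, 3, 4}, the same greyed-out boxes as in the diagram.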

Now, you might have just done all this and be sat there thinking "well, it's obvious". But the point is, it's not as obvious as it may first appear. Sure, it is here, but that's because I put a whacking great wait(3) in the callback. The point I'm trying to make is that spending too long inside callbacks, which usually run in interrupt context, will end up ruining your day. For some of you, the penny about why printf() suddenly breaks your program may well be dropping. We'll come back to this later. Let's move on and make an even bigger mess, just to really drive home the nature of interrupts.

We're now going to add a second Timeout called to2 and give it its own LED and its own callback handler, cb2(). Here's the code; as usual, wipe the contents of your main.cpp and paste this in:-

main.cpp

```cpp
#include "mbed.h"

Timeout to1;
Timeout to2;

DigitalOut led1(LED1);
DigitalOut led2(LED2);
DigitalOut led3(LED3);

// Both callbacks run in interrupt context and block for 3 seconds.
void cb1(void) {
    led2 = 1;
    wait(3);
    led2 = 0;
}

void cb2(void) {
    led3 = 1;
    wait(3);
    led3 = 0;
}

int main() {
    to1.attach(&cb1, 2);   // call cb1() once, 2 seconds from now
    to2.attach(&cb2, 3);   // call cb2() once, 3 seconds from now

    while(1) {
        led1 = 1;
        wait(0.05);
        led1 = 0;
        wait(0.95);
    }
}
```

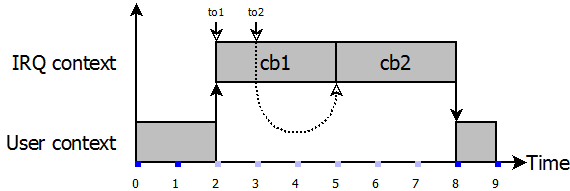

Again we see things happening with the LEDs. As before, while in interrupt context led1 stopped flashing, and this time it stopped for 6 seconds. But notice something else here. Our new timeout to2 was set to trigger three seconds after reset, one second after to1 triggered. So why didn't led3 come on one second after led2? What actually happened is that led2 came on for 3 seconds and then, when it went off, led3 came on for 3 seconds! Let's take a look at our new context diagram and see what's going on.

What should be obvious from this now is that interrupts don't interrupt currently executing interrupts (at least, not ones of the same priority). They "stack". As can be seen from the diagram above, although to2 triggered at t = 3, execution of cb2() didn't begin until t = 5, after cb1() completed and returned.
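The stacking can be sketched as a small model of same-priority interrupts: a pending callback can't preempt one that's already running, so it waits until the CPU is free. This is a hypothetical host-side C++ model (Isr, Run and schedule() are invented names), with times in whole seconds as in the diagrams.

```cpp
#include <cassert>
#include <vector>

// Hypothetical model of same-priority ISRs: a pending ISR cannot preempt
// one that is already running, so it waits ("stacks") until the CPU is free.
struct Isr {
    int trigger;   // time the interrupt fires (seconds)
    int duration;  // time spent inside the callback (seconds)
};

struct Run {
    int start;     // when the callback actually starts executing
    int end;       // when it returns
};

// Assumes `isrs` is sorted by trigger time.
std::vector<Run> schedule(const std::vector<Isr>& isrs) {
    std::vector<Run> runs;
    int cpu_free_at = 0;
    for (const Isr& isr : isrs) {
        assert(isr.duration >= 0);
        // Start when triggered, or when the previous ISR returns if later.
        int start = isr.trigger > cpu_free_at ? isr.trigger : cpu_free_at;
        runs.push_back({start, start + isr.duration});
        cpu_free_at = start + isr.duration;
    }
    return runs;
}
```

Feeding it to1 (fires at 2, runs 3 s) and to2 (fires at 3, runs 3 s) gives runs from 2 to 5 and from 5 to 8, matching what the LEDs did: User Context loses the whole 6 seconds.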

So, after all this exaggeration, it's time to turn our attention to the real programs you write. The first and most obvious rule is:-

Rule 1

Don't use wait() in callbacks.

wait() is really there for use during the initialisation phase of your program, the part before entering the while(1) loop. There are other times it's useful, but restrict them to User Context. Just don't use wait() in an ISR. (Note: there is also wait_us(), which waits for a specified number of microseconds. A short wait_us() isn't nearly as bad, but use it sensibly, and avoid wait() and wait_ms() in callbacks.)

But now it's time for rule number 2, the less obvious one: use printf() in a callback at your peril. Why? "It's the Swiss army knife of debugging!" I hear you shout. Well, let's just disassemble printf(const char *format, ...):-

- printf() doesn't have a magic buffer. It has to malloc() one.

- It has to guess at what size buffer to use, often twice the size of format, so that's a strlen() thrown in to get the length of format.

- If it overruns that 2x buffer, it has to malloc() a bigger one, memcpy() what it has so far and continue.

- Once it has something to send, in steps the real killer hidden within printf(): putc(). printf() must loop over the buffer and putc() each character.

- And with every malloc() there's a free(), just to top it off.

As said above, putc() is the hidden killer lurking to trip up the unsuspecting developer. Let's take a look at what putc() does:-

1. Is the UART's transmit holding register (THR) empty? (Read the THRE bit in the LSR to find out.)

2. No? Wait until it is.

3. Yes? Put the character/byte into THR.

4. Go back to 1 above until all characters are sent.

Now, out of the box, Serial runs at 9600 baud. With the usual framing of 10 bits per character (a start bit, 8 data bits and a stop bit), that's 960 characters per second, so the above loop takes approximately 1ms per character in printf()'s malloc()'ed buffer. It doesn't take long to realise that the more you ask of printf() inside a callback, the longer it takes for that callback to return and the longer you block the system.
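The arithmetic behind "approximately 1ms per character" can be written down as a small helper. It assumes 8N1 framing (10 bits per character) and ignores any transmit FIFO the UART may have, so treat it as a rough estimate of how long a blocking putc() loop keeps you inside the callback. uart_tx_time_us() is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>

// Approximate time (in microseconds) a blocking putc() loop spends pushing
// `chars` bytes out of a UART at `baud`, assuming 8N1 framing:
// 1 start bit + 8 data bits + 1 stop bit = 10 bits on the wire per character.
// Any hardware transmit FIFO is ignored.
double uart_tx_time_us(std::size_t chars, unsigned baud) {
    assert(baud > 0);
    const double bits_per_char = 10.0;
    return static_cast<double>(chars) * bits_per_char * 1e6 / baud;
}
```

For a 40-character debug line at 9600 baud that's roughly 41.7 ms spent in the callback; even at 115200 baud a single character still costs about 87 microseconds.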

Quite often a new programmer will find that it's OK initially. Their debugging output pops out on their terminal. But as their project grows and the system is expected to do more and more, they eventually reach a tipping point where they are simply spending too much time waiting inside a callback. And, as shown earlier, interrupts stack. So when you return from a lengthy callback (thanks to printf()), you may well find yourself going straight back into another one, because another callback was triggered in the meantime with another darn printf() in it too. Game Over.
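The tipping point can be put as a simple ratio. Here's a hypothetical helper (plain C++, invented name) that estimates what share of the CPU is left for User Context when a callback fires every period_ms and spends busy_ms inside the ISR each time. It's a back-of-the-envelope model that ignores every other interrupt in the system.

```cpp
#include <cassert>

// Fraction of CPU time left for User Context when a callback fires every
// period_ms milliseconds and spends busy_ms inside the ISR each time.
// Returns 0 once the callback can no longer finish before it fires again,
// because from then on the ISR backlog never drains.
double user_context_share(double busy_ms, double period_ms) {
    assert(period_ms > 0.0 && busy_ms >= 0.0);
    if (busy_ms >= period_ms) {
        return 0.0;
    }
    return 1.0 - busy_ms / period_ms;
}
```

A callback every 10 ms that spends 2.5 ms printing leaves 75% of the CPU for your while(1) loop; let the printing grow to 10 ms and the share drops to zero.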

Which brings us to rule number 2.

Rule 2

Avoid printf() in callbacks. If you do use one to debug a quick variable, remember to remove the darn thing! And if your entire program relies on a printf() in a callback by design rather than as a quick debug, think about redesigning your program to use better IO techniques. Which, luckily enough, are coming up next!
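As one example of a better IO technique, here's a common pattern sketched in plain host-side C++ (it is not the technique this article goes on to use): the callback only copies its bytes into a small ring buffer, and the while(1) loop later drains the buffer to the serial port at its leisure. LogBuffer, push() and pop() are hypothetical names; the sketch assumes exactly one producer (the callback), one consumer (the main loop), and a toolchain that supports std::atomic for the index type.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>

// Hypothetical single-producer/single-consumer ring buffer. The callback
// (producer) only copies bytes in; the main loop (consumer) later drains
// them to the slow serial port. N must be a power of two.
template <std::size_t N>
class LogBuffer {
    static_assert(N > 0 && (N & (N - 1)) == 0, "N must be a power of two");
    char data_[N];
    std::atomic<std::size_t> head_{0}; // written only by the producer
    std::atomic<std::size_t> tail_{0}; // written only by the consumer

public:
    // Interrupt context: O(1), never waits. Drops the byte if full.
    bool push(char c) {
        std::size_t head = head_.load(std::memory_order_relaxed);
        std::size_t tail = tail_.load(std::memory_order_acquire);
        if (head - tail == N) {
            return false; // full
        }
        data_[head & (N - 1)] = c;
        head_.store(head + 1, std::memory_order_release);
        return true;
    }

    // User Context: returns false when there is nothing to read.
    bool pop(char& c) {
        std::size_t tail = tail_.load(std::memory_order_relaxed);
        std::size_t head = head_.load(std::memory_order_acquire);
        if (head == tail) {
            return false; // empty
        }
        c = data_[tail & (N - 1)];
        tail_.store(tail + 1, std::memory_order_release);
        return true;
    }
};
```

In the callback you would push() each byte instead of calling printf(); in the while(1) loop something like `while (buf.pop(c)) pc.putc(c);` (where pc is your Serial object) does the slow part in User Context. If the buffer fills up, bytes are dropped rather than blocking the ISR.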

Mitigating the issues

So it's time to look at ways of removing these unsightly warts from callbacks. As with any programming language or system, there's more than one way to do things. I'm just going to show one example of my preferred way of dealing with this issue.

This technique is basically all about trying to keep yourself in User Context for as long as possible and only leaving it for very brief "exceptions".

In the examples so far, what I was trying to do is this (this is my specification):-

- led1: flash briefly once per second, EVERY second.

- led2: On at t = 2 and off at t = 5

- led3: On at t = 3 and off at t = 6

Let's look at how I would approach this problem. Here's the code:-

main.cpp

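```cpp
#include "mbed.h"

Timeout to1;
Timeout to2;

DigitalOut led1(LED1);
DigitalOut to1_led(LED2);
DigitalOut to2_led(LED3);

// Flags shared between interrupt context and User Context. volatile tells
// the compiler they can change behind main()'s back, so every test of them
// really reads memory.
volatile bool to1triggered = false;
volatile bool to2triggered = false;

// Callback (interrupt context): just raise the flag and return.
void cb1(void) {
    to1triggered = true;
}

// Handler (User Context): does the real work.
void to1handle(void) {
    if (to1_led == 0) {
        to1_led = 1;
        to1.detach();
        to1.attach(&cb1, 3);   // schedule the "off" event 3 seconds from now
    }
    else {
        to1_led = 0;
        to1.detach();
    }
}

void cb2(void) {
    to2triggered = true;
}

void to2handle(void) {
    if (to2_led == 0) {
        to2_led = 1;
        to2.detach();
        to2.attach(&cb2, 3);
    }
    else {
        to2_led = 0;
        to2.detach();
    }
}

int main() {
    to1.attach(&cb1, 2);
    to2.attach(&cb2, 3);

    while(1) {
        // Service any events the callbacks have flagged.
        if (to1triggered) {
            to1triggered = false;
            to1handle();
        }
        if (to2triggered) {
            to2triggered = false;
            to2handle();
        }

        led1 = 1;
        wait(0.05);
        led1 = 0;
        wait(0.95);
    }
}
```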

Paste that into your compiler and run it. You'll see it does in fact work. Keen-eyed observers will, however, have noticed the huge bug in this program. We'll return to that shortly; I've left it as is so as not to add too much in one go.

So, what's new here? Mainly the introduction of two global bool variables, to1triggered and to2triggered. The main point of these is that when a callback is made, it sets its flag to true. It doesn't do anything else. That's fast, very fast. Compared to the 9 seconds of User Context we have, these callbacks are pretty much instant, requiring just a few microseconds at most.

Now, in our User Context while(1) loop, we test these bools. They effectively act as flags telling your main program that an event occurred that must be handled. So we handle them, with functions dedicated to the job. Notice that each of these reschedules a new future callback based on whether the LED is on or not: if the LED is off, switch it on and schedule a new timeout to switch it off later. Simples :) (Bad Russian accent optional).
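Here's the flag pattern boiled down to a host-side C++ sketch, so it can be run and tested without a board. std::atomic<bool> stands in for the flag variable, and its exchange() reads and clears the flag in one step; handled_count is a hypothetical stand-in for the work to1handle() does.

```cpp
#include <atomic>
#include <cassert>

// Host-side sketch of "set a flag in the ISR, do the work in User Context".
std::atomic<bool> to1_triggered{false};
int handled_count = 0; // hypothetical stand-in for to1handle()'s work

// Interrupt context: just record that the event happened.
void cb1() {
    to1_triggered.store(true);
}

// One pass of the while(1) loop: handle the event if it is pending.
// exchange(false) returns the old value and clears the flag atomically.
void poll_once() {
    if (to1_triggered.exchange(false)) {
        ++handled_count;
    }
}
```

One thing this makes visible: if cb1() fires twice before the loop gets round to polling, the second event is absorbed into the first. A flag remembers that something happened, not how many times, so use a counter or a queue if you need every event.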

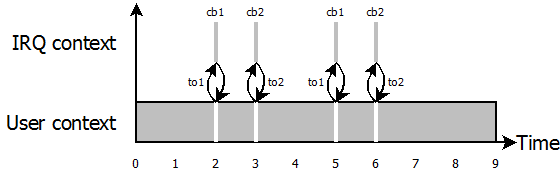

Let's have a look at the context diagram for this program:-

Now, this diagram isn't to scale. If it were, you wouldn't be able to see to1/cb1 and to2/cb2: a single pixel on your screen is far too wide to represent the real time spent in the callbacks. Likewise, if we scaled everything to the width they are shown at, t = 9 would be by the bus stop somewhere down your road, far too wide for your monitor :)

The important point here is the amount of time you have available in User Context. You could be using it for more useful things like calculating PI or searching for extraterrestrial life with SETI. The point is, it's your time. Those LEDs will happily get on with their task while you get on with other tasks.

So, let's come back to that bug I mentioned earlier. It's here:-

```cpp
led1 = 1;
wait(0.05);
led1 = 0;
wait(0.95);
```

The only reason this program works is that I was careful to line up the event times with each other. The flags are only tested once per pass of the loop, so change the values of those wait()s and it'll all fall apart. So the answer is, yes! More timers. Have you noticed that the Mbed library says you can have as many as you want? Useful! Shortly I'll show you how to fix this. But first, as always, a word of advice. This sort of technique is useful for event-driven systems. If your event handlers themselves start to get too long, you can run into trouble. For example, if you call a function to help find another decimal place of PI, chances are to1triggered and to2triggered won't be getting tested for true anytime soon. So think on when designing your program: where will you be spending time, and where do you need to service events?
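To see why those wait()s matter, here's a hypothetical model (plain C++, invented name handled_at_ms()) of a loop that only checks its flags at the top of each pass, at t = 0, loop_ms, 2*loop_ms and so on. It assumes an event raised exactly at a check is seen by that check; on the real board that tie is a race, which is why the original timings had to be lined up so carefully.

```cpp
#include <cassert>

// Hypothetical model: the while(1) loop checks its flags once per pass,
// at t = 0, loop_ms, 2*loop_ms, ... An event raised at event_ms is handled
// at the first check at or after it (ties assumed to favour the event).
long handled_at_ms(long event_ms, long loop_ms) {
    assert(loop_ms > 0 && event_ms >= 0);
    long passes = (event_ms + loop_ms - 1) / loop_ms; // ceiling division
    return passes * loop_ms;
}
```

With a 1000 ms loop, events at 2000 ms and 3000 ms are handled on time. Stretch the loop to 1500 ms and both are handled at 3000 ms, so led2 comes on a second late and at the same moment as led3.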

Now, let's move on to the final example. This example handles the LED specification we outlined earlier AND lets you calculate PI without a care. Both will live in harmony. What's more, we extend LED2 and LED3 so they repeat their sequence rather than coming on once and never again.

main.cpp

```cpp
#include "mbed.h"

Ticker tled1on;      // fires every second to switch led1 on
Timeout tled1off;    // fires 50ms later to switch it off again
Timeout to1;
Timeout to2;

DigitalOut led1(LED1);
DigitalOut to1_led(LED2);
DigitalOut to2_led(LED3);

// Toggle LED2, then reschedule a new event 3 seconds from now.
void cb1(void) {
    if (to1_led == 0) {
        to1_led = 1;
    }
    else {
        to1_led = 0;
    }
    // Reschedule a new event.
    to1.detach();
    to1.attach(&cb1, 3);
}

// Toggle LED3, then reschedule a new event 3 seconds from now.
void cb2(void) {
    if (to2_led == 0) {
        to2_led = 1;
    }
    else {
        to2_led = 0;
    }
    // Reschedule a new event.
    to2.detach();
    to2.attach(&cb2, 3);
}

void tled1off_cb(void) {
    led1 = 0;
}

void tled1on_cb(void) {
    led1 = 1;
    tled1off.detach();
    tled1off.attach(&tled1off_cb, 0.05);
}

int main() {
    // First flash at t = 0; the Ticker takes over from t = 1.
    led1 = 1;
    tled1off.attach(&tled1off_cb, 0.05);
    tled1on.attach(&tled1on_cb, 1);
    to1.attach(&cb1, 2);
    to2.attach(&cb2, 3);

    while(1) {
        // Calculate PI here as we have so much time :)
        // It's like riding a bike with no hands, who's
        // steering?! :)
    }
}
```
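As a quick sanity check of what the final program's cb1() and cb2() do, here's a hypothetical host-side model: a Timeout first fires at first_s, and each callback toggles its LED and re-attaches itself period_s later. It assumes the LEDs start off; led_events() is an invented name.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical model of the self-rescheduling Timeout callbacks: the first
// call happens at first_s, and every call toggles the LED and re-attaches
// itself period_s later. Returns (time in seconds, LED state after toggle)
// for every call up to and including horizon_s.
std::vector<std::pair<int, bool>> led_events(int first_s, int period_s, int horizon_s) {
    assert(period_s > 0);
    std::vector<std::pair<int, bool>> events;
    bool led = false; // assumption: the LED starts off
    for (int t = first_s; t <= horizon_s; t += period_s) {
        led = !led;                 // the callback toggles the LED...
        events.push_back({t, led}); // ...then re-attaches for period_s later
    }
    return events;
}
```

led_events(2, 3, 9) gives on at 2, off at 5, on at 8 for LED2, and led_events(3, 3, 9) gives on at 3, off at 6, on at 9 for LED3, so each LED spends 3 seconds on and 3 seconds off, forever.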

There is one point to note. The LPC17xx manual refers to "modes", and there are two of them: Thread Mode and Handler Mode. Basically, Thread Mode is "user context" and Handler Mode is "interrupt context". I just prefer the notion of "executing in a context". So if you are reading the manual, you'll now know what Thread and Handler Mode are.

And lastly, not covered here are interrupt priorities. The LPC1768 does allow interrupts to be given priorities, which does in fact allow one interrupt to preempt a currently executing ISR rather than stacking behind it, amongst other tricks. However, along with SVC and PendSV, I intend to cover these more advanced topics in a future article.

Hope you enjoyed all that and if you are here reading this line:-

- Thanks for taking the time!

- Enjoy your programming experience, it's supposed to be fun!
