Synchronous wireless star LoRa network, central device.

Dependencies: SX127x sx12xx_hal

radio chip selection

The radio chip driver is not included, so you can choose which radio device to use.

If you're using an SX1272 or SX1276, import the sx127x driver into your program.

If you're using an SX1261 or SX1262, import the sx126x driver into your program.

If you're using an SX1280, import the sx1280 driver into your program.

If you're using the NAmote72 or Murata discovery board, you must import only the sx127x driver.

As an alternative to this project, a gateway running on a Raspberry Pi can be used.

LoRaWAN on single radio channel

Synchronous Star Network

This project acts as the central node for a LoRaWAN-like network operating on a single radio channel. It is intended for use where network infrastructure would otherwise never exist because of the cost and/or complexity of a standard network. This project uses the class-B method of beacon generation to synchronize the end nodes with the gateway. OTA mode must be used. Each end node is allocated an uplink time slot when it joins, and may transmit an uplink in this assigned time slot if it needs to. The time slot is always referenced to the beacon sent by the gateway.

A LoRaWAN network server is not necessary: all network control is implemented by this project. You can observe network activity on the mbed serial port, and downlinks can be scheduled using commands on the serial port.

This implementation must not be used on radio channels that require duty-cycle transmit limiting.

alterations from LoRaWAN specification

This mode of operation uses a single datarate on a single radio channel. ADR is not implemented, because the datarate cannot be changed. OTA mode must be used. When the gateway sends the join-accept, it appends (in place of the CFList) the beacon timing answer, which tells the end node when the next beacon occurs, plus two timing values: the time slot allocated to this end node and the periodicity of the network. Periodicity is how often the end node may transmit again.

The beacon is sent to provide a timing reference to end nodes. The beacon payload may contain a broadcast command to end nodes, but no time value is sent in it: the moment at which the beacon is transmitted is itself the timing reference. As standard, a beacon is sent every 128 seconds.

The Rx2 receive window is not implemented; only Rx1 is used, because there is only a single radio channel. The Rx1 delay is reduced to 100 milliseconds, whereas standard LoRaWAN uses a 1000 millisecond Rx1 delay to accommodate internet latency.

A standard LoRaWAN class-B beacon requires a GPS timing reference. This implementation does not use GPS; instead, a hardware timer peripheral generates interrupts to send beacons. Absolute accuracy is not required; only the relative crystal drift between the gateway and the end nodes matters.

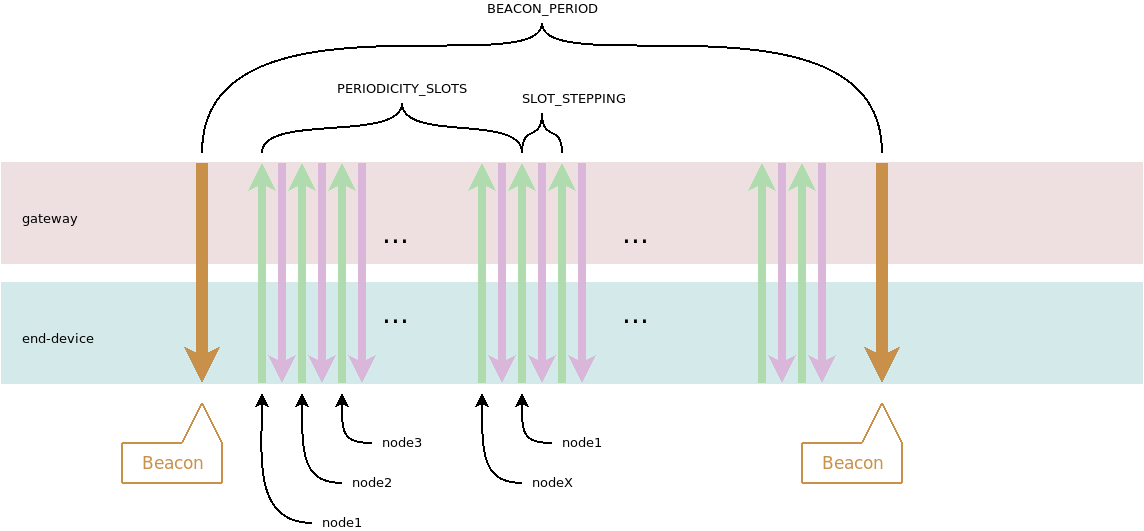

Timing values are always specified in 30 ms steps, as in the LoRaWAN standard. Each beacon period contains 4096 of these 30 ms steps (4096 * 30 ms = 122.88 seconds) available for network traffic.

join OTA procedure

The join procedure reduces the join-accept delay to 100 milliseconds (the standard is 5 seconds). This allows the end node to hunt for the gateway on several channels while joining. When the gateway starts, it scans the available channels and picks the best one based on the ambient noise on each. The end node retries the join request until it finds the gateway. The gateway might change channel during operation if it deems the current channel too busy.

configuration of network

End nodes must be provisioned by editing the file Comissioning.h. The motes array lists every end node permitted on the network; each entry contains the appEui, devEUI and appKey, just as in standard LoRaWAN. All provisioning is hard-coded, so changing it requires reprogramming the gateway. When changing the number of motes, the N_MOTES definition in lorawan.h must be changed to match.

lorawan.h

#define N_MOTES 8
extern ota_mote_t motes[N_MOTES]; /* from Comissioning.h */

configuring number of end-nodes vs transmit rate

You can trade off the number of end nodes on the network against how often each end node can transmit.

In this example, DR_13 is SF7 at 500 kHz:

lorawan.cpp

#elif (LORAMAC_DEFAULT_DATARATE == DR_13)
#define TX_SLOT_STEPPING 8 //approx 0.25 seconds
#define PERIODICITY_SLOTS (TX_SLOT_STEPPING * 6)
#endif

Here, each end node is given 240 ms (8 * 30 ms), which accommodates the maximum payload length for both uplink and downlink.

The 6 in this code is the maximum number of end nodes using this gateway. In this example, each end node can transmit every 1.44 seconds (6 * 240 ms).

If you changed 6 to 20 end nodes, each could use the network every 4.8 seconds.

Another example: DR_12 (SF8) gives approximately 2.5 to 3 dB more gain than SF7, but each end node must be given double the time, so 20 nodes could each use the network every 9.6 seconds at DR_12.

network capacity limitation

The number of end nodes that can be supported is determined by how many TX_SLOT_STEPPING intervals fit within BEACON_PERIOD. The beacon guard is implemented as in standard LoRaWAN: 3 seconds before the beacon start and 2.12 seconds after it, which leaves 122.88 seconds for network traffic in each 128-second beacon period.

gateway configuration

The spreading factor is set by #define LORAMAC_DEFAULT_DATARATE in lorawan.h; valid rates are DR_8 to DR_13 (SF12 to SF7). In the end node, the same definition must be configured in LoRaMac-definitions.h. The network operates at this constant datarate for all packets.

The band plan is selected by defining USE_BAND_* in lorawan.h. The 434 MHz band can be used with the SX1276 shield; the TypeABZ module and the SX1272 support only the 800/900 MHz bands.

end-node provisioning

For security, only a matching DevEUI / AppEUI pair is permitted to join the network, because each end device has a unique AES root key; in this case the DevEUI must be programmed into the gateway in advance. However, if the same AES root key is used for every end device, then any number of end devices can be added later, without the gateway checking the DevEUI when an end device joins. On the gateway, join acceptance is performed in lorawan.cpp, in LoRaWan::parse_receive() where MType == MTYPE_JOIN_REQ: a memcmp() is performed on both DevEUI and AppEUI.

If you wish to allow any DevEUI to join, define ANY_DEVEUI at the top of lorawan.cpp. In this configuration, all end devices must use the same AppEUI and AES key, and N_MOTES must be defined as the maximum number of end devices expected. To test, comment out BoardGetUniqueId() in the end node and replace DevEui[] with 8 arbitrary bytes, then verify that the gateway permits it to join.

RAM usage

For the gateway CPU, consider a part with more RAM, depending on how many end devices are required. ota_mote_t is 123 bytes, and each end device has one. If more end devices must fit in less RAM, the MAC command queue size may be reduced.

hardware support

The radio drivers support the SX1272 and SX1276, the sx126x kit, the sx126x radio-only shield, and the sx128x (2.4 GHz). The radio chip type is selected by which radio driver library you import.

Beacon generation requires the low-power ticker to be clocked from a crystal, not from the internal RC oscillator.

Gateway Serial Interface

Gateway serial port operates at 115200bps.

| command | argument | description |
|---|---|---|
| `list` | - | list joined end nodes |
| `?` | - | list available commands |
| `dl` | `<mote-hex-dev-addr> <hex-payload>` | send downlink, sent after an uplink is received |
| `gpo` | `<mote-hex-dev-addr> <0 or 1>` | set output PC6 pin level on end device |
| `b` | 32-bit hex value | set beacon payload to be sent at next beacon |
| `.` (period) | - | print current status |
| `op` | dBm | configure TX power of gateway |
| `sb` | count | skip sending beacons, for testing end node |
| `f` | hex devAddr | print filtering: show only a single end node |
| `hm` | - | print filtering: hide MAC layer printing |
| `hp` | - | print filtering: hide APP layer printing |
| `sa` | - | print filtering: show all, undo `hm` and `hp` |

Any received uplink will be printed with DevAddr and decrypted payload.

gladman_aes.cpp

- Committer: dudmuck
- Date: 2017-05-18
- Revision: 0:2ff18de8d48b

File content as of revision 0:2ff18de8d48b:

/*

---------------------------------------------------------------------------

Copyright (c) 1998-2008, Brian Gladman, Worcester, UK. All rights reserved.

LICENSE TERMS

The redistribution and use of this software (with or without changes)

is allowed without the payment of fees or royalties provided that:

1. source code distributions include the above copyright notice, this

list of conditions and the following disclaimer;

2. binary distributions include the above copyright notice, this list

of conditions and the following disclaimer in their documentation;

3. the name of the copyright holder is not used to endorse products

built using this software without specific written permission.

DISCLAIMER

This software is provided 'as is' with no explicit or implied warranties

in respect of its properties, including, but not limited to, correctness

and/or fitness for purpose.

---------------------------------------------------------------------------

Issue 09/09/2006

This is an AES implementation that uses only 8-bit byte operations on the

cipher state (there are options to use 32-bit types if available).

The combination of mix columns and byte substitution used here is based on

that developed by Karl Malbrain. His contribution is acknowledged.

*/

/* define if you have a fast memcpy function on your system */

#if 0

# define HAVE_MEMCPY

# include <string.h>

# if defined( _MSC_VER )

# include <intrin.h>

# pragma intrinsic( memcpy )

# endif

#endif

#include <stdlib.h>

#include <stdint.h>

/* define if you have fast 32-bit types on your system */

#if ( __CORTEX_M != 0 ) // if Cortex is different from M0/M0+

# define HAVE_UINT_32T

#endif

/* define to use lookup tables (faster, larger); otherwise S-box values are computed on the fly */

#if 1

# define USE_TABLES

#endif

/* On Intel Core 2 duo VERSION_1 is faster */

/* alternative versions (test for performance on your system) */

#if 1

# define VERSION_1

#endif

#include "gladman_aes.h"

//#if defined( HAVE_UINT_32T )

// typedef unsigned long uint32_t;

//#endif

/* functions for finite field multiplication in the AES Galois field */

#define WPOLY 0x011b

#define BPOLY 0x1b

#define DPOLY 0x008d

#define f1(x) (x)

#define f2(x) ((x << 1) ^ (((x >> 7) & 1) * WPOLY))

#define f4(x) ((x << 2) ^ (((x >> 6) & 1) * WPOLY) ^ (((x >> 6) & 2) * WPOLY))

#define f8(x) ((x << 3) ^ (((x >> 5) & 1) * WPOLY) ^ (((x >> 5) & 2) * WPOLY) \
                        ^ (((x >> 5) & 4) * WPOLY))

#define d2(x) (((x) >> 1) ^ ((x) & 1 ? DPOLY : 0))

#define f3(x) (f2(x) ^ x)

#define f9(x) (f8(x) ^ x)

#define fb(x) (f8(x) ^ f2(x) ^ x)

#define fd(x) (f8(x) ^ f4(x) ^ x)

#define fe(x) (f8(x) ^ f4(x) ^ f2(x))

#if defined( USE_TABLES )

#define sb_data(w) { /* S Box data values */ \
w(0x63), w(0x7c), w(0x77), w(0x7b), w(0xf2), w(0x6b), w(0x6f), w(0xc5),\
w(0x30), w(0x01), w(0x67), w(0x2b), w(0xfe), w(0xd7), w(0xab), w(0x76),\
w(0xca), w(0x82), w(0xc9), w(0x7d), w(0xfa), w(0x59), w(0x47), w(0xf0),\
w(0xad), w(0xd4), w(0xa2), w(0xaf), w(0x9c), w(0xa4), w(0x72), w(0xc0),\
w(0xb7), w(0xfd), w(0x93), w(0x26), w(0x36), w(0x3f), w(0xf7), w(0xcc),\
w(0x34), w(0xa5), w(0xe5), w(0xf1), w(0x71), w(0xd8), w(0x31), w(0x15),\
w(0x04), w(0xc7), w(0x23), w(0xc3), w(0x18), w(0x96), w(0x05), w(0x9a),\
w(0x07), w(0x12), w(0x80), w(0xe2), w(0xeb), w(0x27), w(0xb2), w(0x75),\
w(0x09), w(0x83), w(0x2c), w(0x1a), w(0x1b), w(0x6e), w(0x5a), w(0xa0),\
w(0x52), w(0x3b), w(0xd6), w(0xb3), w(0x29), w(0xe3), w(0x2f), w(0x84),\
w(0x53), w(0xd1), w(0x00), w(0xed), w(0x20), w(0xfc), w(0xb1), w(0x5b),\
w(0x6a), w(0xcb), w(0xbe), w(0x39), w(0x4a), w(0x4c), w(0x58), w(0xcf),\
w(0xd0), w(0xef), w(0xaa), w(0xfb), w(0x43), w(0x4d), w(0x33), w(0x85),\
w(0x45), w(0xf9), w(0x02), w(0x7f), w(0x50), w(0x3c), w(0x9f), w(0xa8),\
w(0x51), w(0xa3), w(0x40), w(0x8f), w(0x92), w(0x9d), w(0x38), w(0xf5),\
w(0xbc), w(0xb6), w(0xda), w(0x21), w(0x10), w(0xff), w(0xf3), w(0xd2),\
w(0xcd), w(0x0c), w(0x13), w(0xec), w(0x5f), w(0x97), w(0x44), w(0x17),\
w(0xc4), w(0xa7), w(0x7e), w(0x3d), w(0x64), w(0x5d), w(0x19), w(0x73),\
w(0x60), w(0x81), w(0x4f), w(0xdc), w(0x22), w(0x2a), w(0x90), w(0x88),\
w(0x46), w(0xee), w(0xb8), w(0x14), w(0xde), w(0x5e), w(0x0b), w(0xdb),\
w(0xe0), w(0x32), w(0x3a), w(0x0a), w(0x49), w(0x06), w(0x24), w(0x5c),\
w(0xc2), w(0xd3), w(0xac), w(0x62), w(0x91), w(0x95), w(0xe4), w(0x79),\
w(0xe7), w(0xc8), w(0x37), w(0x6d), w(0x8d), w(0xd5), w(0x4e), w(0xa9),\
w(0x6c), w(0x56), w(0xf4), w(0xea), w(0x65), w(0x7a), w(0xae), w(0x08),\
w(0xba), w(0x78), w(0x25), w(0x2e), w(0x1c), w(0xa6), w(0xb4), w(0xc6),\
w(0xe8), w(0xdd), w(0x74), w(0x1f), w(0x4b), w(0xbd), w(0x8b), w(0x8a),\
w(0x70), w(0x3e), w(0xb5), w(0x66), w(0x48), w(0x03), w(0xf6), w(0x0e),\
w(0x61), w(0x35), w(0x57), w(0xb9), w(0x86), w(0xc1), w(0x1d), w(0x9e),\
w(0xe1), w(0xf8), w(0x98), w(0x11), w(0x69), w(0xd9), w(0x8e), w(0x94),\
w(0x9b), w(0x1e), w(0x87), w(0xe9), w(0xce), w(0x55), w(0x28), w(0xdf),\
w(0x8c), w(0xa1), w(0x89), w(0x0d), w(0xbf), w(0xe6), w(0x42), w(0x68),\
w(0x41), w(0x99), w(0x2d), w(0x0f), w(0xb0), w(0x54), w(0xbb), w(0x16) }

#define isb_data(w) { /* inverse S Box data values */ \
w(0x52), w(0x09), w(0x6a), w(0xd5), w(0x30), w(0x36), w(0xa5), w(0x38),\
w(0xbf), w(0x40), w(0xa3), w(0x9e), w(0x81), w(0xf3), w(0xd7), w(0xfb),\
w(0x7c), w(0xe3), w(0x39), w(0x82), w(0x9b), w(0x2f), w(0xff), w(0x87),\
w(0x34), w(0x8e), w(0x43), w(0x44), w(0xc4), w(0xde), w(0xe9), w(0xcb),\
w(0x54), w(0x7b), w(0x94), w(0x32), w(0xa6), w(0xc2), w(0x23), w(0x3d),\
w(0xee), w(0x4c), w(0x95), w(0x0b), w(0x42), w(0xfa), w(0xc3), w(0x4e),\
w(0x08), w(0x2e), w(0xa1), w(0x66), w(0x28), w(0xd9), w(0x24), w(0xb2),\
w(0x76), w(0x5b), w(0xa2), w(0x49), w(0x6d), w(0x8b), w(0xd1), w(0x25),\
w(0x72), w(0xf8), w(0xf6), w(0x64), w(0x86), w(0x68), w(0x98), w(0x16),\
w(0xd4), w(0xa4), w(0x5c), w(0xcc), w(0x5d), w(0x65), w(0xb6), w(0x92),\
w(0x6c), w(0x70), w(0x48), w(0x50), w(0xfd), w(0xed), w(0xb9), w(0xda),\
w(0x5e), w(0x15), w(0x46), w(0x57), w(0xa7), w(0x8d), w(0x9d), w(0x84),\
w(0x90), w(0xd8), w(0xab), w(0x00), w(0x8c), w(0xbc), w(0xd3), w(0x0a),\
w(0xf7), w(0xe4), w(0x58), w(0x05), w(0xb8), w(0xb3), w(0x45), w(0x06),\
w(0xd0), w(0x2c), w(0x1e), w(0x8f), w(0xca), w(0x3f), w(0x0f), w(0x02),\
w(0xc1), w(0xaf), w(0xbd), w(0x03), w(0x01), w(0x13), w(0x8a), w(0x6b),\
w(0x3a), w(0x91), w(0x11), w(0x41), w(0x4f), w(0x67), w(0xdc), w(0xea),\
w(0x97), w(0xf2), w(0xcf), w(0xce), w(0xf0), w(0xb4), w(0xe6), w(0x73),\
w(0x96), w(0xac), w(0x74), w(0x22), w(0xe7), w(0xad), w(0x35), w(0x85),\
w(0xe2), w(0xf9), w(0x37), w(0xe8), w(0x1c), w(0x75), w(0xdf), w(0x6e),\
w(0x47), w(0xf1), w(0x1a), w(0x71), w(0x1d), w(0x29), w(0xc5), w(0x89),\
w(0x6f), w(0xb7), w(0x62), w(0x0e), w(0xaa), w(0x18), w(0xbe), w(0x1b),\
w(0xfc), w(0x56), w(0x3e), w(0x4b), w(0xc6), w(0xd2), w(0x79), w(0x20),\
w(0x9a), w(0xdb), w(0xc0), w(0xfe), w(0x78), w(0xcd), w(0x5a), w(0xf4),\
w(0x1f), w(0xdd), w(0xa8), w(0x33), w(0x88), w(0x07), w(0xc7), w(0x31),\
w(0xb1), w(0x12), w(0x10), w(0x59), w(0x27), w(0x80), w(0xec), w(0x5f),\
w(0x60), w(0x51), w(0x7f), w(0xa9), w(0x19), w(0xb5), w(0x4a), w(0x0d),\
w(0x2d), w(0xe5), w(0x7a), w(0x9f), w(0x93), w(0xc9), w(0x9c), w(0xef),\
w(0xa0), w(0xe0), w(0x3b), w(0x4d), w(0xae), w(0x2a), w(0xf5), w(0xb0),\
w(0xc8), w(0xeb), w(0xbb), w(0x3c), w(0x83), w(0x53), w(0x99), w(0x61),\
w(0x17), w(0x2b), w(0x04), w(0x7e), w(0xba), w(0x77), w(0xd6), w(0x26),\
w(0xe1), w(0x69), w(0x14), w(0x63), w(0x55), w(0x21), w(0x0c), w(0x7d) }

#define mm_data(w) { /* basic data for forming finite field tables */ \
w(0x00), w(0x01), w(0x02), w(0x03), w(0x04), w(0x05), w(0x06), w(0x07),\
w(0x08), w(0x09), w(0x0a), w(0x0b), w(0x0c), w(0x0d), w(0x0e), w(0x0f),\
w(0x10), w(0x11), w(0x12), w(0x13), w(0x14), w(0x15), w(0x16), w(0x17),\
w(0x18), w(0x19), w(0x1a), w(0x1b), w(0x1c), w(0x1d), w(0x1e), w(0x1f),\
w(0x20), w(0x21), w(0x22), w(0x23), w(0x24), w(0x25), w(0x26), w(0x27),\
w(0x28), w(0x29), w(0x2a), w(0x2b), w(0x2c), w(0x2d), w(0x2e), w(0x2f),\
w(0x30), w(0x31), w(0x32), w(0x33), w(0x34), w(0x35), w(0x36), w(0x37),\
w(0x38), w(0x39), w(0x3a), w(0x3b), w(0x3c), w(0x3d), w(0x3e), w(0x3f),\
w(0x40), w(0x41), w(0x42), w(0x43), w(0x44), w(0x45), w(0x46), w(0x47),\
w(0x48), w(0x49), w(0x4a), w(0x4b), w(0x4c), w(0x4d), w(0x4e), w(0x4f),\
w(0x50), w(0x51), w(0x52), w(0x53), w(0x54), w(0x55), w(0x56), w(0x57),\
w(0x58), w(0x59), w(0x5a), w(0x5b), w(0x5c), w(0x5d), w(0x5e), w(0x5f),\
w(0x60), w(0x61), w(0x62), w(0x63), w(0x64), w(0x65), w(0x66), w(0x67),\
w(0x68), w(0x69), w(0x6a), w(0x6b), w(0x6c), w(0x6d), w(0x6e), w(0x6f),\
w(0x70), w(0x71), w(0x72), w(0x73), w(0x74), w(0x75), w(0x76), w(0x77),\
w(0x78), w(0x79), w(0x7a), w(0x7b), w(0x7c), w(0x7d), w(0x7e), w(0x7f),\
w(0x80), w(0x81), w(0x82), w(0x83), w(0x84), w(0x85), w(0x86), w(0x87),\
w(0x88), w(0x89), w(0x8a), w(0x8b), w(0x8c), w(0x8d), w(0x8e), w(0x8f),\
w(0x90), w(0x91), w(0x92), w(0x93), w(0x94), w(0x95), w(0x96), w(0x97),\
w(0x98), w(0x99), w(0x9a), w(0x9b), w(0x9c), w(0x9d), w(0x9e), w(0x9f),\
w(0xa0), w(0xa1), w(0xa2), w(0xa3), w(0xa4), w(0xa5), w(0xa6), w(0xa7),\
w(0xa8), w(0xa9), w(0xaa), w(0xab), w(0xac), w(0xad), w(0xae), w(0xaf),\
w(0xb0), w(0xb1), w(0xb2), w(0xb3), w(0xb4), w(0xb5), w(0xb6), w(0xb7),\
w(0xb8), w(0xb9), w(0xba), w(0xbb), w(0xbc), w(0xbd), w(0xbe), w(0xbf),\
w(0xc0), w(0xc1), w(0xc2), w(0xc3), w(0xc4), w(0xc5), w(0xc6), w(0xc7),\
w(0xc8), w(0xc9), w(0xca), w(0xcb), w(0xcc), w(0xcd), w(0xce), w(0xcf),\
w(0xd0), w(0xd1), w(0xd2), w(0xd3), w(0xd4), w(0xd5), w(0xd6), w(0xd7),\
w(0xd8), w(0xd9), w(0xda), w(0xdb), w(0xdc), w(0xdd), w(0xde), w(0xdf),\
w(0xe0), w(0xe1), w(0xe2), w(0xe3), w(0xe4), w(0xe5), w(0xe6), w(0xe7),\
w(0xe8), w(0xe9), w(0xea), w(0xeb), w(0xec), w(0xed), w(0xee), w(0xef),\
w(0xf0), w(0xf1), w(0xf2), w(0xf3), w(0xf4), w(0xf5), w(0xf6), w(0xf7),\
w(0xf8), w(0xf9), w(0xfa), w(0xfb), w(0xfc), w(0xfd), w(0xfe), w(0xff) }

static const uint8_t sbox[256] = sb_data(f1);

#if defined( AES_DEC_PREKEYED )

static const uint8_t isbox[256] = isb_data(f1);

#endif

static const uint8_t gfm2_sbox[256] = sb_data(f2);

static const uint8_t gfm3_sbox[256] = sb_data(f3);

#if defined( AES_DEC_PREKEYED )

static const uint8_t gfmul_9[256] = mm_data(f9);

static const uint8_t gfmul_b[256] = mm_data(fb);

static const uint8_t gfmul_d[256] = mm_data(fd);

static const uint8_t gfmul_e[256] = mm_data(fe);

#endif

#define s_box(x) sbox[(x)]

#if defined( AES_DEC_PREKEYED )

#define is_box(x) isbox[(x)]

#endif

#define gfm2_sb(x) gfm2_sbox[(x)]

#define gfm3_sb(x) gfm3_sbox[(x)]

#if defined( AES_DEC_PREKEYED )

#define gfm_9(x) gfmul_9[(x)]

#define gfm_b(x) gfmul_b[(x)]

#define gfm_d(x) gfmul_d[(x)]

#define gfm_e(x) gfmul_e[(x)]

#endif

#else

/* this is the high bit of x right shifted by 1 */

/* position. Since the starting polynomial has */

/* 9 bits (0x11b), this right shift keeps the */

/* values of all top bits within a byte */

static uint8_t hibit(const uint8_t x)

{ uint8_t r = (uint8_t)((x >> 1) | (x >> 2));

r |= (r >> 2);

r |= (r >> 4);

return (r + 1) >> 1;

}

/* return the inverse of the finite field element x */

static uint8_t gf_inv(const uint8_t x)

{ uint8_t p1 = x, p2 = BPOLY, n1 = hibit(x), n2 = 0x80, v1 = 1, v2 = 0;

if(x < 2)

return x;

for( ; ; )

{

if(n1)

while(n2 >= n1) /* divide polynomial p2 by p1 */

{

n2 /= n1; /* shift smaller polynomial left */

p2 ^= (p1 * n2) & 0xff; /* and remove from larger one */

v2 ^= (v1 * n2); /* shift accumulated value and */

n2 = hibit(p2); /* add into result */

}

else

return v1;

if(n2) /* repeat with values swapped */

while(n1 >= n2)

{

n1 /= n2;

p1 ^= p2 * n1;

v1 ^= v2 * n1;

n1 = hibit(p1);

}

else

return v2;

}

}

/* The forward and inverse affine transformations used in the S-box */

uint8_t fwd_affine(const uint8_t x)

{

#if defined( HAVE_UINT_32T )

uint32_t w = x;

w ^= (w << 1) ^ (w << 2) ^ (w << 3) ^ (w << 4);

return 0x63 ^ ((w ^ (w >> 8)) & 0xff);

#else

return 0x63 ^ x ^ (x << 1) ^ (x << 2) ^ (x << 3) ^ (x << 4)

^ (x >> 7) ^ (x >> 6) ^ (x >> 5) ^ (x >> 4);

#endif

}

uint8_t inv_affine(const uint8_t x)

{

#if defined( HAVE_UINT_32T )

uint32_t w = x;

w = (w << 1) ^ (w << 3) ^ (w << 6);

return 0x05 ^ ((w ^ (w >> 8)) & 0xff);

#else

return 0x05 ^ (x << 1) ^ (x << 3) ^ (x << 6)

^ (x >> 7) ^ (x >> 5) ^ (x >> 2);

#endif

}

#define s_box(x) fwd_affine(gf_inv(x))

#define is_box(x) gf_inv(inv_affine(x))

#define gfm2_sb(x) f2(s_box(x))

#define gfm3_sb(x) f3(s_box(x))

#define gfm_9(x) f9(x)

#define gfm_b(x) fb(x)

#define gfm_d(x) fd(x)

#define gfm_e(x) fe(x)

#endif

#if defined( HAVE_MEMCPY )

# define block_copy_nn(d, s, l) memcpy(d, s, l)

# define block_copy(d, s) memcpy(d, s, N_BLOCK)

#else

# define block_copy_nn(d, s, l) copy_block_nn(d, s, l)

# define block_copy(d, s) copy_block(d, s)

#endif

static void copy_block( void *d, const void *s )

{

#if defined( HAVE_UINT_32T )

((uint32_t*)d)[ 0] = ((uint32_t*)s)[ 0];

((uint32_t*)d)[ 1] = ((uint32_t*)s)[ 1];

((uint32_t*)d)[ 2] = ((uint32_t*)s)[ 2];

((uint32_t*)d)[ 3] = ((uint32_t*)s)[ 3];

#else

((uint8_t*)d)[ 0] = ((uint8_t*)s)[ 0];

((uint8_t*)d)[ 1] = ((uint8_t*)s)[ 1];

((uint8_t*)d)[ 2] = ((uint8_t*)s)[ 2];

((uint8_t*)d)[ 3] = ((uint8_t*)s)[ 3];

((uint8_t*)d)[ 4] = ((uint8_t*)s)[ 4];

((uint8_t*)d)[ 5] = ((uint8_t*)s)[ 5];

((uint8_t*)d)[ 6] = ((uint8_t*)s)[ 6];

((uint8_t*)d)[ 7] = ((uint8_t*)s)[ 7];

((uint8_t*)d)[ 8] = ((uint8_t*)s)[ 8];

((uint8_t*)d)[ 9] = ((uint8_t*)s)[ 9];

((uint8_t*)d)[10] = ((uint8_t*)s)[10];

((uint8_t*)d)[11] = ((uint8_t*)s)[11];

((uint8_t*)d)[12] = ((uint8_t*)s)[12];

((uint8_t*)d)[13] = ((uint8_t*)s)[13];

((uint8_t*)d)[14] = ((uint8_t*)s)[14];

((uint8_t*)d)[15] = ((uint8_t*)s)[15];

#endif

}

static void copy_block_nn( uint8_t * d, const uint8_t *s, uint8_t nn )

{

while( nn-- )

//*((uint8_t*)d)++ = *((uint8_t*)s)++;

*d++ = *s++;

}

static void xor_block( void *d, const void *s )

{

#if defined( HAVE_UINT_32T )

((uint32_t*)d)[ 0] ^= ((uint32_t*)s)[ 0];

((uint32_t*)d)[ 1] ^= ((uint32_t*)s)[ 1];

((uint32_t*)d)[ 2] ^= ((uint32_t*)s)[ 2];

((uint32_t*)d)[ 3] ^= ((uint32_t*)s)[ 3];

#else

((uint8_t*)d)[ 0] ^= ((uint8_t*)s)[ 0];

((uint8_t*)d)[ 1] ^= ((uint8_t*)s)[ 1];

((uint8_t*)d)[ 2] ^= ((uint8_t*)s)[ 2];

((uint8_t*)d)[ 3] ^= ((uint8_t*)s)[ 3];

((uint8_t*)d)[ 4] ^= ((uint8_t*)s)[ 4];

((uint8_t*)d)[ 5] ^= ((uint8_t*)s)[ 5];

((uint8_t*)d)[ 6] ^= ((uint8_t*)s)[ 6];

((uint8_t*)d)[ 7] ^= ((uint8_t*)s)[ 7];

((uint8_t*)d)[ 8] ^= ((uint8_t*)s)[ 8];

((uint8_t*)d)[ 9] ^= ((uint8_t*)s)[ 9];

((uint8_t*)d)[10] ^= ((uint8_t*)s)[10];

((uint8_t*)d)[11] ^= ((uint8_t*)s)[11];

((uint8_t*)d)[12] ^= ((uint8_t*)s)[12];

((uint8_t*)d)[13] ^= ((uint8_t*)s)[13];

((uint8_t*)d)[14] ^= ((uint8_t*)s)[14];

((uint8_t*)d)[15] ^= ((uint8_t*)s)[15];

#endif

}

static void copy_and_key( void *d, const void *s, const void *k )

{

#if defined( HAVE_UINT_32T )

((uint32_t*)d)[ 0] = ((uint32_t*)s)[ 0] ^ ((uint32_t*)k)[ 0];

((uint32_t*)d)[ 1] = ((uint32_t*)s)[ 1] ^ ((uint32_t*)k)[ 1];

((uint32_t*)d)[ 2] = ((uint32_t*)s)[ 2] ^ ((uint32_t*)k)[ 2];

((uint32_t*)d)[ 3] = ((uint32_t*)s)[ 3] ^ ((uint32_t*)k)[ 3];

#elif 1

((uint8_t*)d)[ 0] = ((uint8_t*)s)[ 0] ^ ((uint8_t*)k)[ 0];

((uint8_t*)d)[ 1] = ((uint8_t*)s)[ 1] ^ ((uint8_t*)k)[ 1];

((uint8_t*)d)[ 2] = ((uint8_t*)s)[ 2] ^ ((uint8_t*)k)[ 2];

((uint8_t*)d)[ 3] = ((uint8_t*)s)[ 3] ^ ((uint8_t*)k)[ 3];

((uint8_t*)d)[ 4] = ((uint8_t*)s)[ 4] ^ ((uint8_t*)k)[ 4];

((uint8_t*)d)[ 5] = ((uint8_t*)s)[ 5] ^ ((uint8_t*)k)[ 5];

((uint8_t*)d)[ 6] = ((uint8_t*)s)[ 6] ^ ((uint8_t*)k)[ 6];

((uint8_t*)d)[ 7] = ((uint8_t*)s)[ 7] ^ ((uint8_t*)k)[ 7];

((uint8_t*)d)[ 8] = ((uint8_t*)s)[ 8] ^ ((uint8_t*)k)[ 8];

((uint8_t*)d)[ 9] = ((uint8_t*)s)[ 9] ^ ((uint8_t*)k)[ 9];

((uint8_t*)d)[10] = ((uint8_t*)s)[10] ^ ((uint8_t*)k)[10];

((uint8_t*)d)[11] = ((uint8_t*)s)[11] ^ ((uint8_t*)k)[11];

((uint8_t*)d)[12] = ((uint8_t*)s)[12] ^ ((uint8_t*)k)[12];

((uint8_t*)d)[13] = ((uint8_t*)s)[13] ^ ((uint8_t*)k)[13];

((uint8_t*)d)[14] = ((uint8_t*)s)[14] ^ ((uint8_t*)k)[14];

((uint8_t*)d)[15] = ((uint8_t*)s)[15] ^ ((uint8_t*)k)[15];

#else

block_copy(d, s);

xor_block(d, k);

#endif

}

static void add_round_key( uint8_t d[N_BLOCK], const uint8_t k[N_BLOCK] )

{

xor_block(d, k);

}

static void shift_sub_rows( uint8_t st[N_BLOCK] )

{ uint8_t tt;

st[ 0] = s_box(st[ 0]); st[ 4] = s_box(st[ 4]);

st[ 8] = s_box(st[ 8]); st[12] = s_box(st[12]);

tt = st[1]; st[ 1] = s_box(st[ 5]); st[ 5] = s_box(st[ 9]);

st[ 9] = s_box(st[13]); st[13] = s_box( tt );

tt = st[2]; st[ 2] = s_box(st[10]); st[10] = s_box( tt );

tt = st[6]; st[ 6] = s_box(st[14]); st[14] = s_box( tt );

tt = st[15]; st[15] = s_box(st[11]); st[11] = s_box(st[ 7]);

st[ 7] = s_box(st[ 3]); st[ 3] = s_box( tt );

}

#if defined( AES_DEC_PREKEYED )

static void inv_shift_sub_rows( uint8_t st[N_BLOCK] )

{ uint8_t tt;

st[ 0] = is_box(st[ 0]); st[ 4] = is_box(st[ 4]);

st[ 8] = is_box(st[ 8]); st[12] = is_box(st[12]);

tt = st[13]; st[13] = is_box(st[9]); st[ 9] = is_box(st[5]);

st[ 5] = is_box(st[1]); st[ 1] = is_box( tt );

tt = st[2]; st[ 2] = is_box(st[10]); st[10] = is_box( tt );

tt = st[6]; st[ 6] = is_box(st[14]); st[14] = is_box( tt );

tt = st[3]; st[ 3] = is_box(st[ 7]); st[ 7] = is_box(st[11]);

st[11] = is_box(st[15]); st[15] = is_box( tt );

}

#endif

#if defined( VERSION_1 )

static void mix_sub_columns( uint8_t dt[N_BLOCK] )

{ uint8_t st[N_BLOCK];

block_copy(st, dt);

#else

static void mix_sub_columns( uint8_t dt[N_BLOCK], uint8_t st[N_BLOCK] )

{

#endif

dt[ 0] = gfm2_sb(st[0]) ^ gfm3_sb(st[5]) ^ s_box(st[10]) ^ s_box(st[15]);

dt[ 1] = s_box(st[0]) ^ gfm2_sb(st[5]) ^ gfm3_sb(st[10]) ^ s_box(st[15]);

dt[ 2] = s_box(st[0]) ^ s_box(st[5]) ^ gfm2_sb(st[10]) ^ gfm3_sb(st[15]);

dt[ 3] = gfm3_sb(st[0]) ^ s_box(st[5]) ^ s_box(st[10]) ^ gfm2_sb(st[15]);

dt[ 4] = gfm2_sb(st[4]) ^ gfm3_sb(st[9]) ^ s_box(st[14]) ^ s_box(st[3]);

dt[ 5] = s_box(st[4]) ^ gfm2_sb(st[9]) ^ gfm3_sb(st[14]) ^ s_box(st[3]);

dt[ 6] = s_box(st[4]) ^ s_box(st[9]) ^ gfm2_sb(st[14]) ^ gfm3_sb(st[3]);

dt[ 7] = gfm3_sb(st[4]) ^ s_box(st[9]) ^ s_box(st[14]) ^ gfm2_sb(st[3]);

dt[ 8] = gfm2_sb(st[8]) ^ gfm3_sb(st[13]) ^ s_box(st[2]) ^ s_box(st[7]);

dt[ 9] = s_box(st[8]) ^ gfm2_sb(st[13]) ^ gfm3_sb(st[2]) ^ s_box(st[7]);

dt[10] = s_box(st[8]) ^ s_box(st[13]) ^ gfm2_sb(st[2]) ^ gfm3_sb(st[7]);

dt[11] = gfm3_sb(st[8]) ^ s_box(st[13]) ^ s_box(st[2]) ^ gfm2_sb(st[7]);

dt[12] = gfm2_sb(st[12]) ^ gfm3_sb(st[1]) ^ s_box(st[6]) ^ s_box(st[11]);

dt[13] = s_box(st[12]) ^ gfm2_sb(st[1]) ^ gfm3_sb(st[6]) ^ s_box(st[11]);

dt[14] = s_box(st[12]) ^ s_box(st[1]) ^ gfm2_sb(st[6]) ^ gfm3_sb(st[11]);

dt[15] = gfm3_sb(st[12]) ^ s_box(st[1]) ^ s_box(st[6]) ^ gfm2_sb(st[11]);

}

#if defined( AES_DEC_PREKEYED )

#if defined( VERSION_1 )

static void inv_mix_sub_columns( uint8_t dt[N_BLOCK] )

{ uint8_t st[N_BLOCK];

block_copy(st, dt);

#else

static void inv_mix_sub_columns( uint8_t dt[N_BLOCK], uint8_t st[N_BLOCK] )

{

#endif

dt[ 0] = is_box(gfm_e(st[ 0]) ^ gfm_b(st[ 1]) ^ gfm_d(st[ 2]) ^ gfm_9(st[ 3]));

dt[ 5] = is_box(gfm_9(st[ 0]) ^ gfm_e(st[ 1]) ^ gfm_b(st[ 2]) ^ gfm_d(st[ 3]));

dt[10] = is_box(gfm_d(st[ 0]) ^ gfm_9(st[ 1]) ^ gfm_e(st[ 2]) ^ gfm_b(st[ 3]));

dt[15] = is_box(gfm_b(st[ 0]) ^ gfm_d(st[ 1]) ^ gfm_9(st[ 2]) ^ gfm_e(st[ 3]));

dt[ 4] = is_box(gfm_e(st[ 4]) ^ gfm_b(st[ 5]) ^ gfm_d(st[ 6]) ^ gfm_9(st[ 7]));

dt[ 9] = is_box(gfm_9(st[ 4]) ^ gfm_e(st[ 5]) ^ gfm_b(st[ 6]) ^ gfm_d(st[ 7]));

dt[14] = is_box(gfm_d(st[ 4]) ^ gfm_9(st[ 5]) ^ gfm_e(st[ 6]) ^ gfm_b(st[ 7]));

dt[ 3] = is_box(gfm_b(st[ 4]) ^ gfm_d(st[ 5]) ^ gfm_9(st[ 6]) ^ gfm_e(st[ 7]));

dt[ 8] = is_box(gfm_e(st[ 8]) ^ gfm_b(st[ 9]) ^ gfm_d(st[10]) ^ gfm_9(st[11]));

dt[13] = is_box(gfm_9(st[ 8]) ^ gfm_e(st[ 9]) ^ gfm_b(st[10]) ^ gfm_d(st[11]));

dt[ 2] = is_box(gfm_d(st[ 8]) ^ gfm_9(st[ 9]) ^ gfm_e(st[10]) ^ gfm_b(st[11]));

dt[ 7] = is_box(gfm_b(st[ 8]) ^ gfm_d(st[ 9]) ^ gfm_9(st[10]) ^ gfm_e(st[11]));

dt[12] = is_box(gfm_e(st[12]) ^ gfm_b(st[13]) ^ gfm_d(st[14]) ^ gfm_9(st[15]));

dt[ 1] = is_box(gfm_9(st[12]) ^ gfm_e(st[13]) ^ gfm_b(st[14]) ^ gfm_d(st[15]));

dt[ 6] = is_box(gfm_d(st[12]) ^ gfm_9(st[13]) ^ gfm_e(st[14]) ^ gfm_b(st[15]));

dt[11] = is_box(gfm_b(st[12]) ^ gfm_d(st[13]) ^ gfm_9(st[14]) ^ gfm_e(st[15]));

}

#endif

#if defined( AES_ENC_PREKEYED ) || defined( AES_DEC_PREKEYED )

/* Set the cipher key for the pre-keyed version */

return_type aes_set_key( const uint8_t key[], length_type keylen, aes_context ctx[1] )

{

uint8_t cc, rc, hi;

switch( keylen )

{

case 16:

case 24:

case 32:

break;

default:

ctx->rnd = 0;

return ( uint8_t )-1;

}

block_copy_nn(ctx->ksch, key, keylen);

hi = (keylen + 28) << 2;

ctx->rnd = (hi >> 4) - 1;

for( cc = keylen, rc = 1; cc < hi; cc += 4 )

{ uint8_t tt, t0, t1, t2, t3;

t0 = ctx->ksch[cc - 4];

t1 = ctx->ksch[cc - 3];

t2 = ctx->ksch[cc - 2];

t3 = ctx->ksch[cc - 1];

if( cc % keylen == 0 )

{

tt = t0;

t0 = s_box(t1) ^ rc;

t1 = s_box(t2);

t2 = s_box(t3);

t3 = s_box(tt);

rc = f2(rc);

}

else if( keylen > 24 && cc % keylen == 16 )

{

t0 = s_box(t0);

t1 = s_box(t1);

t2 = s_box(t2);

t3 = s_box(t3);

}

tt = cc - keylen;

ctx->ksch[cc + 0] = ctx->ksch[tt + 0] ^ t0;

ctx->ksch[cc + 1] = ctx->ksch[tt + 1] ^ t1;

ctx->ksch[cc + 2] = ctx->ksch[tt + 2] ^ t2;

ctx->ksch[cc + 3] = ctx->ksch[tt + 3] ^ t3;

}

return 0;

}

#endif

#if defined( AES_ENC_PREKEYED )

/* Encrypt a single block of 16 bytes */

return_type aes_encrypt( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK], const aes_context ctx[1] )

{

if( ctx->rnd )

{

uint8_t s1[N_BLOCK], r;

copy_and_key( s1, in, ctx->ksch );

for( r = 1 ; r < ctx->rnd ; ++r )

#if defined( VERSION_1 )

{

mix_sub_columns( s1 );

add_round_key( s1, ctx->ksch + r * N_BLOCK);

}

#else

{ uint8_t s2[N_BLOCK];

mix_sub_columns( s2, s1 );

copy_and_key( s1, s2, ctx->ksch + r * N_BLOCK);

}

#endif

shift_sub_rows( s1 );

copy_and_key( out, s1, ctx->ksch + r * N_BLOCK );

}

else

return ( uint8_t )-1;

return 0;

}

/* CBC encrypt a number of blocks (input and return an IV) */

return_type aes_cbc_encrypt( const uint8_t *in, uint8_t *out,

int32_t n_block, uint8_t iv[N_BLOCK], const aes_context ctx[1] )

{

while(n_block--)

{

xor_block(iv, in);

if(aes_encrypt(iv, iv, ctx) != EXIT_SUCCESS)

return EXIT_FAILURE;

//memcpy(out, iv, N_BLOCK);

block_copy(out, iv);

in += N_BLOCK;

out += N_BLOCK;

}

return EXIT_SUCCESS;

}

#endif

#if defined( AES_DEC_PREKEYED )

/* Decrypt a single block of 16 bytes */

return_type aes_decrypt( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK], const aes_context ctx[1] )

{

if( ctx->rnd )

{

uint8_t s1[N_BLOCK], r;

copy_and_key( s1, in, ctx->ksch + ctx->rnd * N_BLOCK );

inv_shift_sub_rows( s1 );

for( r = ctx->rnd ; --r ; )

#if defined( VERSION_1 )

{

add_round_key( s1, ctx->ksch + r * N_BLOCK );

inv_mix_sub_columns( s1 );

}

#else

{ uint8_t s2[N_BLOCK];

copy_and_key( s2, s1, ctx->ksch + r * N_BLOCK );

inv_mix_sub_columns( s1, s2 );

}

#endif

copy_and_key( out, s1, ctx->ksch );

}

else

return -1;

return 0;

}

/* CBC decrypt a number of blocks (input and return an IV) */
return_type aes_cbc_decrypt( const uint8_t *in, uint8_t *out,
                             int32_t n_block, uint8_t iv[N_BLOCK], const aes_context ctx[1] )
{
    while(n_block--)
    {   uint8_t tmp[N_BLOCK];

        /* save the ciphertext block first: in and out may be the same buffer */
        //memcpy(tmp, in, N_BLOCK);
        block_copy(tmp, in);
        if(aes_decrypt(in, out, ctx) != EXIT_SUCCESS)
            return EXIT_FAILURE;
        xor_block(out, iv);
        /* this ciphertext block is the IV for the next one */
        //memcpy(iv, tmp, N_BLOCK);
        block_copy(iv, tmp);
        in += N_BLOCK;
        out += N_BLOCK;
    }
    return EXIT_SUCCESS;
}

#endif
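`aes_cbc_decrypt` copies each ciphertext block into `tmp` before decrypting, because `in` and `out` may point to the same buffer. The saved block then becomes the next IV.

The sketch below shows that chaining in both directions. A deliberately trivial 16-byte permutation stands in for AES: `toy_encrypt`/`toy_decrypt`, which are hypothetical and not part of this file. They XOR with the key and rotate the bytes. The sketch therefore demonstrates only the CBC bookkeeping and provides no security.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define BLK 16

/* Hypothetical stand-in for a block cipher: XOR with the key, then rotate the
   bytes left by one. It is invertible but offers NO security. */
static void toy_encrypt(const uint8_t in[BLK], uint8_t out[BLK], const uint8_t key[BLK])
{
    uint8_t t[BLK];
    for (int i = 0; i < BLK; ++i) t[i] = (uint8_t)(in[i] ^ key[i]);
    for (int i = 0; i < BLK; ++i) out[i] = t[(i + 1) % BLK];
}

static void toy_decrypt(const uint8_t in[BLK], uint8_t out[BLK], const uint8_t key[BLK])
{
    uint8_t t[BLK];
    for (int i = 0; i < BLK; ++i) t[(i + 1) % BLK] = in[i];
    for (int i = 0; i < BLK; ++i) out[i] = (uint8_t)(t[i] ^ key[i]);
}

/* C_i = E(P_i ^ C_(i-1)) with C_0 = IV; on return iv holds the last ciphertext block */
static void cbc_encrypt(const uint8_t *in, uint8_t *out, int n_block,
                        uint8_t iv[BLK], const uint8_t key[BLK])
{
    while (n_block--) {
        for (int i = 0; i < BLK; ++i) iv[i] ^= in[i];   /* like xor_block(iv, in) */
        toy_encrypt(iv, iv, key);
        memcpy(out, iv, BLK);
        in += BLK;
        out += BLK;
    }
}

/* P_i = D(C_i) ^ C_(i-1); safe in place because C_i is saved first */
static void cbc_decrypt(const uint8_t *in, uint8_t *out, int n_block,
                        uint8_t iv[BLK], const uint8_t key[BLK])
{
    while (n_block--) {
        uint8_t tmp[BLK];
        memcpy(tmp, in, BLK);            /* out may overwrite in */
        toy_decrypt(in, out, key);
        for (int i = 0; i < BLK; ++i) out[i] ^= iv[i];
        memcpy(iv, tmp, BLK);            /* C_i chains into the next block */
        in += BLK;
        out += BLK;
    }
}
```

Encrypting two identical plaintext blocks in place yields two different ciphertext blocks. Decrypting the same buffer in place with the original IV restores the plaintext.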

#if defined( AES_ENC_128_OTFK )

/* The 'on the fly' encryption key update for 128 bit keys */
static void update_encrypt_key_128( uint8_t k[N_BLOCK], uint8_t *rc )
{   uint8_t cc;

    k[0] ^= s_box(k[13]) ^ *rc;
    k[1] ^= s_box(k[14]);
    k[2] ^= s_box(k[15]);
    k[3] ^= s_box(k[12]);
    *rc = f2( *rc );

    for(cc = 4; cc < 16; cc += 4 )
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }
}

/* Encrypt a single block of 16 bytes with 'on the fly' 128 bit keying */
void aes_encrypt_128( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK],
                      const uint8_t key[N_BLOCK], uint8_t o_key[N_BLOCK] )
{   uint8_t s1[N_BLOCK], r, rc = 1;

    if(o_key != key)
        block_copy( o_key, key );
    copy_and_key( s1, in, o_key );

    for( r = 1 ; r < 10 ; ++r )
#if defined( VERSION_1 )
    {
        mix_sub_columns( s1 );
        update_encrypt_key_128( o_key, &rc );
        add_round_key( s1, o_key );
    }
#else
    {   uint8_t s2[N_BLOCK];
        mix_sub_columns( s2, s1 );
        update_encrypt_key_128( o_key, &rc );
        copy_and_key( s1, s2, o_key );
    }
#endif

    shift_sub_rows( s1 );
    update_encrypt_key_128( o_key, &rc );
    copy_and_key( out, s1, o_key );
}

#endif
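`update_encrypt_key_128` advances a 16-byte key in place from round key *i* to round key *i+1*. It applies RotWord, SubWord and Rcon to the last word, then a running XOR across the other words, and doubles `rc` in GF(2^8) on each call.

The standalone sketch below reimplements that step with a computed S-box. It uses a hypothetical `xtime` helper in place of the library's `f2`. It is checked against the FIPS-197 Appendix A.1 expansion of the key `2b7e1516 28aed2a6 abf71588 09cf4f3c`.

It also shows that after the ten updates made by `aes_encrypt_128`, `rc` equals 0x6c. That matches the starting value in `aes_decrypt_128`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* GF(2^8) doubling; plays the role of f2() */
static uint8_t xtime(uint8_t x)
{
    return (uint8_t)((x << 1) ^ ((x & 0x80) ? 0x1b : 0x00));
}

static uint8_t gmul(uint8_t a, uint8_t b)
{
    uint8_t p = 0;
    for (; b; b >>= 1, a = xtime(a))
        if (b & 1)
            p ^= a;
    return p;
}

static uint8_t sbox[256];

/* S-box = affine transform of the GF(2^8) inverse; plays the role of s_box() */
static void build_sbox(void)
{
    for (int x = 0; x < 256; ++x) {
        uint8_t inv = 0, s;
        for (int y = 1; x != 0 && y < 256; ++y)
            if (gmul((uint8_t)x, (uint8_t)y) == 1) { inv = (uint8_t)y; break; }
        s = inv;
        for (int i = 1; i <= 4; ++i)
            s ^= (uint8_t)((inv << i) | (inv >> (8 - i)));
        sbox[x] = (uint8_t)(s ^ 0x63);
    }
}

/* Same step as update_encrypt_key_128: round key i -> round key i + 1 */
static void next_key_128(uint8_t k[16], uint8_t *rc)
{
    k[0] ^= (uint8_t)(sbox[k[13]] ^ *rc);   /* RotWord + SubWord + Rcon of word 3 */
    k[1] ^= sbox[k[14]];
    k[2] ^= sbox[k[15]];
    k[3] ^= sbox[k[12]];
    *rc = xtime(*rc);
    for (int c = 4; c < 16; c += 4)         /* each word ^= the new word before it */
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
}
```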

#if defined( AES_DEC_128_OTFK )

/* The 'on the fly' decryption key update for 128 bit keys */
static void update_decrypt_key_128( uint8_t k[N_BLOCK], uint8_t *rc )
{   uint8_t cc;

    for( cc = 12; cc > 0; cc -= 4 )
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }
    *rc = d2(*rc);
    k[0] ^= s_box(k[13]) ^ *rc;
    k[1] ^= s_box(k[14]);
    k[2] ^= s_box(k[15]);
    k[3] ^= s_box(k[12]);
}

/* Decrypt a single block of 16 bytes with 'on the fly' 128 bit keying */
void aes_decrypt_128( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK],
                      const uint8_t key[N_BLOCK], uint8_t o_key[N_BLOCK] )
{
    /* 0x6c is the value rc reaches after the ten updates in aes_encrypt_128 */
    uint8_t s1[N_BLOCK], r, rc = 0x6c;

    if(o_key != key)
        block_copy( o_key, key );

    copy_and_key( s1, in, o_key );
    inv_shift_sub_rows( s1 );

    for( r = 10 ; --r ; )
#if defined( VERSION_1 )
    {
        update_decrypt_key_128( o_key, &rc );
        add_round_key( s1, o_key );
        inv_mix_sub_columns( s1 );
    }
#else
    {   uint8_t s2[N_BLOCK];
        update_decrypt_key_128( o_key, &rc );
        copy_and_key( s2, s1, o_key );
        inv_mix_sub_columns( s1, s2 );
    }
#endif

    update_decrypt_key_128( o_key, &rc );
    copy_and_key( out, s1, o_key );
}

#endif
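`update_decrypt_key_128` undoes one encryption update. It first removes the running XOR, working from the last word down, then halves `rc` with `d2` and strips the SubWord/Rcon term from the first word. The code starts from the last round key and from rc = 0x6c, so `key` in `aes_decrypt_128` appears to be the `o_key` state left by `aes_encrypt_128`, not the cipher key itself.

A standalone check follows. It assumes a computed S-box and hypothetical `xtime`/`halve` helpers standing in for `f2`/`d2`. Ten forward updates followed by ten inverse updates return the original key and rc = 0x01.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static uint8_t xtime(uint8_t x)   /* GF(2^8) doubling, like f2() */
{
    return (uint8_t)((x << 1) ^ ((x & 0x80) ? 0x1b : 0x00));
}

static uint8_t halve(uint8_t x)   /* GF(2^8) halving, inverse of xtime, like d2() */
{
    return (uint8_t)((x >> 1) ^ ((x & 1) ? 0x8d : 0x00));
}

static uint8_t gmul(uint8_t a, uint8_t b)
{
    uint8_t p = 0;
    for (; b; b >>= 1, a = xtime(a))
        if (b & 1)
            p ^= a;
    return p;
}

static uint8_t sbox[256];

static void build_sbox(void)      /* affine transform of the GF(2^8) inverse */
{
    for (int x = 0; x < 256; ++x) {
        uint8_t inv = 0, s;
        for (int y = 1; x != 0 && y < 256; ++y)
            if (gmul((uint8_t)x, (uint8_t)y) == 1) { inv = (uint8_t)y; break; }
        s = inv;
        for (int i = 1; i <= 4; ++i)
            s ^= (uint8_t)((inv << i) | (inv >> (8 - i)));
        sbox[x] = (uint8_t)(s ^ 0x63);
    }
}

/* Forward step, as in update_encrypt_key_128 */
static void next_key_128(uint8_t k[16], uint8_t *rc)
{
    k[0] ^= (uint8_t)(sbox[k[13]] ^ *rc);
    k[1] ^= sbox[k[14]];
    k[2] ^= sbox[k[15]];
    k[3] ^= sbox[k[12]];
    *rc = xtime(*rc);
    for (int c = 4; c < 16; c += 4)
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
}

/* Inverse step, as in update_decrypt_key_128 */
static void prev_key_128(uint8_t k[16], uint8_t *rc)
{
    for (int c = 12; c > 0; c -= 4)       /* undo the running XOR, last word first */
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
    *rc = halve(*rc);
    k[0] ^= (uint8_t)(sbox[k[13]] ^ *rc); /* word 3 is restored, so this cancels */
    k[1] ^= sbox[k[14]];
    k[2] ^= sbox[k[15]];
    k[3] ^= sbox[k[12]];
}
```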

#if defined( AES_ENC_256_OTFK )

/* The 'on the fly' encryption key update for 256 bit keys */
static void update_encrypt_key_256( uint8_t k[2 * N_BLOCK], uint8_t *rc )
{   uint8_t cc;

    k[0] ^= s_box(k[29]) ^ *rc;
    k[1] ^= s_box(k[30]);
    k[2] ^= s_box(k[31]);
    k[3] ^= s_box(k[28]);
    *rc = f2( *rc );

    for(cc = 4; cc < 16; cc += 4)
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }

    k[16] ^= s_box(k[12]);
    k[17] ^= s_box(k[13]);
    k[18] ^= s_box(k[14]);
    k[19] ^= s_box(k[15]);

    for( cc = 20; cc < 32; cc += 4 )
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }
}

/* Encrypt a single block of 16 bytes with 'on the fly' 256 bit keying */
void aes_encrypt_256( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK],
                      const uint8_t key[2 * N_BLOCK], uint8_t o_key[2 * N_BLOCK] )
{
    uint8_t s1[N_BLOCK], r, rc = 1;

    if(o_key != key)
    {
        block_copy( o_key, key );
        block_copy( o_key + 16, key + 16 );
    }
    copy_and_key( s1, in, o_key );

    for( r = 1 ; r < 14 ; ++r )
#if defined( VERSION_1 )
    {
        mix_sub_columns(s1);
        if( r & 1 )
            add_round_key( s1, o_key + 16 );
        else
        {
            update_encrypt_key_256( o_key, &rc );
            add_round_key( s1, o_key );
        }
    }
#else
    {   uint8_t s2[N_BLOCK];
        mix_sub_columns( s2, s1 );
        if( r & 1 )
            copy_and_key( s1, s2, o_key + 16 );
        else
        {
            update_encrypt_key_256( o_key, &rc );
            copy_and_key( s1, s2, o_key );
        }
    }
#endif

    shift_sub_rows( s1 );
    update_encrypt_key_256( o_key, &rc );
    copy_and_key( out, s1, o_key );
}

#endif
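In `aes_encrypt_256` the 32-byte `o_key` holds two consecutive round keys. Odd rounds use the second half (`o_key + 16`) unchanged. Even rounds, and the final round, first call `update_encrypt_key_256`, which advances both halves by two round keys:
- the first half gets RotWord/SubWord/Rcon of word 7;
- the second half gets SubWord only (no rotation, no Rcon) of the new word 3.

The standalone sketch below reproduces that update with a computed S-box and a hypothetical `xtime` helper in place of `f2`. It is checked against words w8 to w15 of the FIPS-197 Appendix A.3 expansion.

The update runs seven times per block: on rounds 2, 4, ..., 12, plus once before the final round. That leaves `rc` = 0x80, the initial value used by `aes_decrypt_256`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static uint8_t xtime(uint8_t x)   /* GF(2^8) doubling, like f2() */
{
    return (uint8_t)((x << 1) ^ ((x & 0x80) ? 0x1b : 0x00));
}

static uint8_t gmul(uint8_t a, uint8_t b)
{
    uint8_t p = 0;
    for (; b; b >>= 1, a = xtime(a))
        if (b & 1)
            p ^= a;
    return p;
}

static uint8_t sbox[256];

static void build_sbox(void)      /* affine transform of the GF(2^8) inverse */
{
    for (int x = 0; x < 256; ++x) {
        uint8_t inv = 0, s;
        for (int y = 1; x != 0 && y < 256; ++y)
            if (gmul((uint8_t)x, (uint8_t)y) == 1) { inv = (uint8_t)y; break; }
        s = inv;
        for (int i = 1; i <= 4; ++i)
            s ^= (uint8_t)((inv << i) | (inv >> (8 - i)));
        sbox[x] = (uint8_t)(s ^ 0x63);
    }
}

/* Same step as update_encrypt_key_256: words w[i..i+7] -> w[i+8..i+15] */
static void next_key_256(uint8_t k[32], uint8_t *rc)
{
    k[0] ^= (uint8_t)(sbox[k[29]] ^ *rc); /* RotWord + SubWord + Rcon of word 7 */
    k[1] ^= sbox[k[30]];
    k[2] ^= sbox[k[31]];
    k[3] ^= sbox[k[28]];
    *rc = xtime(*rc);
    for (int c = 4; c < 16; c += 4)
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
    for (int j = 0; j < 4; ++j)           /* SubWord only of the new word 3 */
        k[16 + j] ^= sbox[k[12 + j]];
    for (int c = 20; c < 32; c += 4)
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
}
```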

#if defined( AES_DEC_256_OTFK )

/* The 'on the fly' decryption key update for 256 bit keys */
static void update_decrypt_key_256( uint8_t k[2 * N_BLOCK], uint8_t *rc )
{   uint8_t cc;

    for(cc = 28; cc > 16; cc -= 4)
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }

    k[16] ^= s_box(k[12]);
    k[17] ^= s_box(k[13]);
    k[18] ^= s_box(k[14]);
    k[19] ^= s_box(k[15]);

    for(cc = 12; cc > 0; cc -= 4)
    {
        k[cc + 0] ^= k[cc - 4];
        k[cc + 1] ^= k[cc - 3];
        k[cc + 2] ^= k[cc - 2];
        k[cc + 3] ^= k[cc - 1];
    }

    *rc = d2(*rc);
    k[0] ^= s_box(k[29]) ^ *rc;
    k[1] ^= s_box(k[30]);
    k[2] ^= s_box(k[31]);
    k[3] ^= s_box(k[28]);
}

/* Decrypt a single block of 16 bytes with 'on the fly' 256 bit keying */
void aes_decrypt_256( const uint8_t in[N_BLOCK], uint8_t out[N_BLOCK],
                      const uint8_t key[2 * N_BLOCK], uint8_t o_key[2 * N_BLOCK] )
{
    uint8_t s1[N_BLOCK], r, rc = 0x80;

    if(o_key != key)
    {
        block_copy( o_key, key );
        block_copy( o_key + 16, key + 16 );
    }

    copy_and_key( s1, in, o_key );
    inv_shift_sub_rows( s1 );

    for( r = 14 ; --r ; )
#if defined( VERSION_1 )
    {
        if( ( r & 1 ) )
        {
            update_decrypt_key_256( o_key, &rc );
            add_round_key( s1, o_key + 16 );
        }
        else
            add_round_key( s1, o_key );
        inv_mix_sub_columns( s1 );
    }
#else
    {   uint8_t s2[N_BLOCK];
        if( ( r & 1 ) )
        {
            update_decrypt_key_256( o_key, &rc );
            copy_and_key( s2, s1, o_key + 16 );
        }
        else
            copy_and_key( s2, s1, o_key );
        inv_mix_sub_columns( s1, s2 );
    }
#endif

    copy_and_key( out, s1, o_key );
}

#endif
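`update_decrypt_key_256` runs the encryption update backwards. It unwinds the second half first: the running XOR, then SubWord of word 3 while that word still holds its updated value. It then unwinds the first half, halving `rc` with `d2` before it removes the Rcon term. The code starts from rc = 0x80 and uses the first 16 bytes of `o_key` as the last round key, so `key` here appears to be the `o_key` state left by `aes_encrypt_256`.

The standalone check below assumes a computed S-box and hypothetical `xtime`/`halve` helpers standing in for `f2`/`d2`. Seven forward updates followed by seven inverse updates recover the original 256-bit key and return `rc` to 0x01.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

static uint8_t xtime(uint8_t x) { return (uint8_t)((x << 1) ^ ((x & 0x80) ? 0x1b : 0x00)); }
static uint8_t halve(uint8_t x) { return (uint8_t)((x >> 1) ^ ((x & 1) ? 0x8d : 0x00)); }

static uint8_t gmul(uint8_t a, uint8_t b)
{
    uint8_t p = 0;
    for (; b; b >>= 1, a = xtime(a))
        if (b & 1)
            p ^= a;
    return p;
}

static uint8_t sbox[256];

static void build_sbox(void)      /* affine transform of the GF(2^8) inverse */
{
    for (int x = 0; x < 256; ++x) {
        uint8_t inv = 0, s;
        for (int y = 1; x != 0 && y < 256; ++y)
            if (gmul((uint8_t)x, (uint8_t)y) == 1) { inv = (uint8_t)y; break; }
        s = inv;
        for (int i = 1; i <= 4; ++i)
            s ^= (uint8_t)((inv << i) | (inv >> (8 - i)));
        sbox[x] = (uint8_t)(s ^ 0x63);
    }
}

/* XOR each 4-byte word in k[from..to) with the word before it, ascending or descending */
static void chain(uint8_t *k, int first, int last, int step)
{
    for (int c = first; c != last; c += step)
        for (int j = 0; j < 4; ++j)
            k[c + j] ^= k[c + j - 4];
}

/* Forward step, as in update_encrypt_key_256 */
static void next_key_256(uint8_t k[32], uint8_t *rc)
{
    k[0] ^= (uint8_t)(sbox[k[29]] ^ *rc);
    k[1] ^= sbox[k[30]]; k[2] ^= sbox[k[31]]; k[3] ^= sbox[k[28]];
    *rc = xtime(*rc);
    chain(k, 4, 16, 4);
    for (int j = 0; j < 4; ++j) k[16 + j] ^= sbox[k[12 + j]];
    chain(k, 20, 32, 4);
}

/* Inverse step, as in update_decrypt_key_256 */
static void prev_key_256(uint8_t k[32], uint8_t *rc)
{
    chain(k, 28, 16, -4);                 /* second half, last word first */
    for (int j = 0; j < 4; ++j) k[16 + j] ^= sbox[k[12 + j]];
    chain(k, 12, 0, -4);                  /* first half, last word first */
    *rc = halve(*rc);
    k[0] ^= (uint8_t)(sbox[k[29]] ^ *rc);
    k[1] ^= sbox[k[30]]; k[2] ^= sbox[k[31]]; k[3] ^= sbox[k[28]];
}
```

The small hypothetical `chain` helper replaces the four unrolled XOR lines of the original loops. Descending order matters in the inverse, because each word must be restored using the still-updated word before it.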