Important changes to forums and questions

All forums and questions are now archived. To start a new conversation or read the latest updates go to forums.mbed.com.

9 years, 7 months ago.

Ethernet TCP Socket: Packet Anomaly (causing slowdowns and packet loss)

I am attempting to transfer data from a client (the mbed) to a server (a PC running a Python server). The data is packed into 148-byte structures (one 32-bit unsigned int and 72 16-bit unsigned ints), and one structure is meant to be transmitted every 500 µs. I use the following lines of code to make the mbed transmit, and I drive a DigitalOut high during each transmission so I can see how long it takes.
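As a quick sanity check on those numbers, here is a host-side sketch (plain C++, not mbed code). It reproduces the packet layout, checks at compile time that it occupies 148 bytes with no padding, and computes the payload rate at one packet per 500 µs. The struct tag is omitted, and `payloadBytesPerSecond` is a hypothetical helper introduced only for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Host-side copy of the question's packet layout (struct tag omitted).
typedef struct {
    uint32_t tStamp;     // 4 bytes
    uint16_t data[72];   // 72 * 2 = 144 bytes
} packetToSend_t;

// 4 + 144 = 148, which is already a multiple of the 4-byte alignment of
// uint32_t, so a typical compiler adds no padding.
static_assert(sizeof(packetToSend_t) == 148, "packet is not 148 bytes");

// Hypothetical helper: payload bytes per second for one packet every periodSeconds.
double payloadBytesPerSecond(std::size_t packetBytes, double periodSeconds) {
    assert(periodSeconds > 0.0);
    return static_cast<double>(packetBytes) / periodSeconds;
}
```

`payloadBytesPerSecond(sizeof(packetToSend_t), 0.0005)` comes to about 296,000 bytes/s, roughly 2.4 Mbit/s of payload. The Ethernet, IP, and TCP headers carried by each transmitted segment come on top of that.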

Target Code

```cpp
txComplete = 1;
while (socket.send_all((char*)&dataOut, sizeof(packetToSend_t)) == -1); // send the data
txComplete = 0;
```

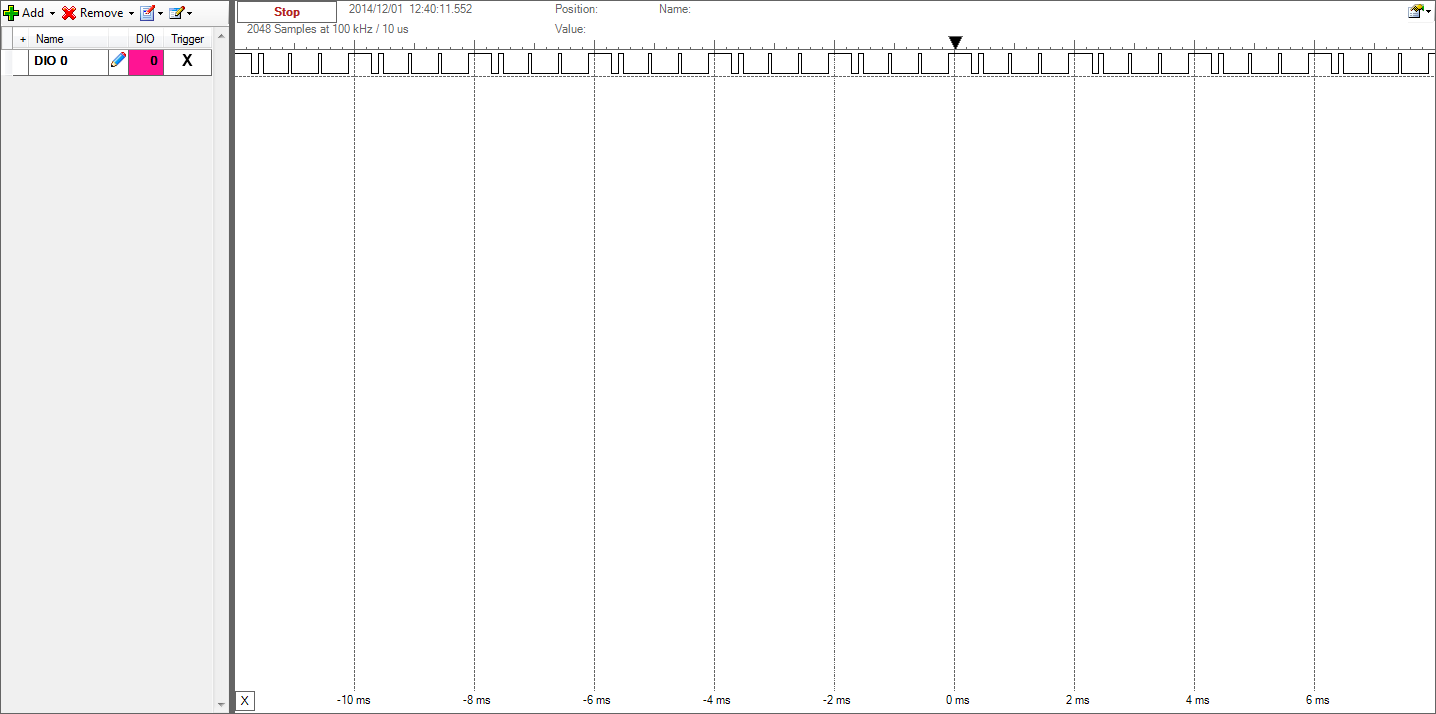

So far I have had moderate success in reducing packet loss by raising the priority of the server process on my PC to realtime. However, I suspect there is something more fundamental I can do on the mbed side to speed up transmission and reduce packet loss. The logic-analyzer capture above shows the DigitalOut "txComplete". Consistently, three transmissions complete quickly, and then a fourth takes a REALLY long time by comparison. Admittedly my Ethernet and networking experience is limited, so perhaps I am missing some fundamental concept. As I understand it, the socket library's send_all call should start transmitting the data and return -1 only if there is an error. Why would it hit an error so regularly, and in this specific pattern?
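For readers less familiar with this kind of call, here is a sketch of what a generic "send all" helper typically does over a stream socket. It calls a lower-level send repeatedly until every byte has been accepted, and it returns -1 as soon as one call fails. This is not the mbed library's source. `SendFn` and `sendAll` are hypothetical names, and the lower-level send is assumed to follow the common BSD-style convention of returning the number of bytes accepted, or -1 on error.

If send_all behaves like this, two consequences follow:

- With a blocking socket, a slow call may simply mean the TCP stack was waiting for buffer space, not that an error occurred.
- Retrying the whole buffer inside `while (... == -1);` would resend any bytes that had already gone out before a failure.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>

// HYPOTHETICAL low-level send, BSD-style: returns the number of bytes the stack
// accepted (possibly fewer than len) or -1 on error. Not the mbed API.
using SendFn = std::function<int(const char* buf, std::size_t len)>;

// Generic "send all" loop: keeps calling sendSome() until every byte is accepted.
// Returns the total bytes sent, or -1 as soon as a call fails. After a -1 the
// caller cannot tell how many bytes already went out, so retrying the whole
// buffer may resend some of them and misalign the stream at the receiver.
int sendAll(const SendFn& sendSome, const char* buf, std::size_t len) {
    assert(buf != nullptr || len == 0);
    std::size_t sent = 0;
    while (sent < len) {
        int n = sendSome(buf + sent, len - sent);
        if (n <= 0) {
            return -1;  // error, or no progress (treated as an error to avoid spinning)
        }
        sent += static_cast<std::size_t>(n);
    }
    return static_cast<int>(sent);
}
```

For example, a transport that accepts at most 64 bytes per call still delivers a 148-byte packet in three calls (64 + 64 + 20), and `sendAll` returns 148.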

Full Code for Reference

```cpp
/*******************************************************************************************\

\*******************************************************************************************/

#include "mbed.h"
#include "EthernetInterface.h"

//_____________________________________________________________________________Object Dec

EthernetInterface eth;          // Requisite Ethernet
TCPSocketConnection socket;     // Requisite TCP/IP socket
Serial pc(USBTX, USBRX);        // For debugging
Ticker sampleTick;              // Signals beginning of sample period
DigitalOut txComplete(p6);      // Pin out for debug

typedef struct packetToSent_s { // Struct for containing data packets
    uint32_t tStamp;
    uint16_t data[72];
} packetToSend_t;

packetToSend_t dataOut;

//_____________________________________________________________________________Prototypes

void SampleTick();

//_________________________________________________________________________________Global

volatile int tFlag = 0;         // volatile: written by the Ticker ISR, read in main()
volatile uint32_t tCount = 0;   // volatile: written by the Ticker ISR, read in main()

//_______________________________________________________________________________________Main

int main() {
    char sDurRx[3];             // Incoming sample duration (two ASCII digits)
    int sDur = 0;               // Sample duration in integer form
    int i = 0;
    int j = 0;

    eth.init("192.168.1.4", "255.255.255.0", "192.168.1.1"); // Static IP of 192.168.1.4
    eth.connect();
    socket.connect("192.168.1.5", 1001);  // Connect to the PC at the given IP address and port

    socket.receive_all(sDurRx, 2);
    sDurRx[2] = 0;
    sDur = atoi(sDurRx);        // Converts input sample time to integer

    uint32_t endItAll = sDur * 2000;  // 2000 ticks of 500 us = 1 s, so sDur is in seconds

    for (i = 0; i < 72; i++) {
        dataOut.data[i] = i;
    }

    sampleTick.attach(&SampleTick, 0.0005);  // Initiate sample ticker (500 us)

    while (tCount <= endItAll) {
        if (tFlag == 1) {
            tFlag = 0;                       // Lower the tick flag
            dataOut.tStamp = tCount;
            /*for (j = 0; j < 72; j++) {
                dataOut.data[j] = rand() % 10;
            }*/
            txComplete = 1;
            while (socket.send_all((char*)&dataOut, sizeof(packetToSend_t)) == -1); // Send the data
            txComplete = 0;
        }
    }

    dataOut.tStamp = 4294967295;  // 0xFFFFFFFF: end-of-run marker for the server
    socket.send_all((char*)&dataOut, sizeof(packetToSend_t));
}

//_______________________________________________________________________________________

void SampleTick() {
    tFlag = 1;    // Raises tick flag
    tCount++;     // Increments the tick counter
}
```
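The main loop above can also drop samples on its own, independent of the network. SampleTick() only sets tFlag to 1, so every tick that fires while send_all is blocked re-raises the same flag. When send_all returns, the loop sends one packet stamped with the latest tCount, and the intermediate timestamps are never sent. At the Python server this looks like packet loss, even though TCP delivered everything it was given.

The host-side model below illustrates the effect. It counts time in whole 500 µs ticks. The repeating send-duration pattern {1, 1, 1, 4} is a hypothetical stand-in loosely shaped like the logic-analyzer capture (three sends finishing within one period, then one spanning four); it is not a measurement. `stampsWithFlag` and `countMissing` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Host-side model of the question's main loop (not mbed code). Time advances in
// whole 500 us ticks. sendPeriods is a HYPOTHETICAL repeating pattern: the i-th
// send_all, started on tick k, returns just as tick k + sendPeriods[i % size]
// fires (so 1 means it finishes within its own period). Returns the stamps sent.
std::vector<uint32_t> stampsWithFlag(const std::vector<uint32_t>& sendPeriods,
                                     uint32_t totalTicks) {
    assert(!sendPeriods.empty());
    std::vector<uint32_t> sent;
    uint32_t busyUntil = 0;  // the loop is blocked inside send_all until this tick
    std::size_t i = 0;       // index of the next send duration to use
    for (uint32_t tCount = 1; tCount <= totalTicks; ++tCount) {
        // SampleTick() has just run: tFlag = 1 and tCount was incremented.
        // Ticks that fire while the loop is blocked only re-raise the same flag,
        // so they are never serviced individually.
        if (tCount >= busyUntil) {
            sent.push_back(tCount);  // dataOut.tStamp = tCount; send_all(...)
            busyUntil = tCount + sendPeriods[i % sendPeriods.size()];
            ++i;
        }
    }
    return sent;
}

// What the PC would see: how many timestamps are skipped between received packets.
uint32_t countMissing(const std::vector<uint32_t>& stamps) {
    uint32_t missing = 0;
    for (std::size_t j = 1; j < stamps.size(); ++j) {
        missing += stamps[j] - stamps[j - 1] - 1;  // stamps are strictly increasing
    }
    return missing;
}
```

With that pattern, `stampsWithFlag({1, 1, 1, 4}, 15)` sends timestamps 1, 2, 3, 4, 8, 9, 10, 11, 15, and `countMissing` reports 6 skipped samples. If gaps matter, one possible change is to have SampleTick() increment a pending count, or to have main() compare tCount with the last stamp it sent. That way, missed ticks are at least detected on the mbed side.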

1 Answer

9 years, 7 months ago.

I haven't [yet] needed this level of performance from the Ethernet stack on the mbed. But I do see that you are using TCP, so I can't help but wonder whether some of that time is spent handshaking with the PC (TCP requires the PC to acknowledge the data it receives), not just in one-way transmission. If I'm right, then the PC's scheduler also comes into play.

If you could monitor the link between the two with Wireshark, it would be interesting to see the timing it reports. Any unexpected handshakes between the mbed and the PC would be quite visible.

Wireshark has to be on the same network segment. There are two ways to arrange this:

- Run Wireshark on the server PC that is also running the Python server.

- Run Wireshark on a third PC. In that case you'll need a hub (not a switch) to put all three on the same network, or a managed switch that can mirror all the traffic on one port to another port.

Neither option is "free": each may add PC (or network) processing and change the behavior. But the mbed and the logic analyzer stay constant. If either experiment changes the overall timing, you'll see it on the logic analyzer, and that in itself is a clue.

Also, I would suggest wiggling one more port pin, from inside SampleTick(). There is no strong reason to monitor it other than to confirm that it behaves exactly as expected.