Gesture Controlled Robot

Overview

Georgia Tech ECE 4180 Design Project, Spring 2015.

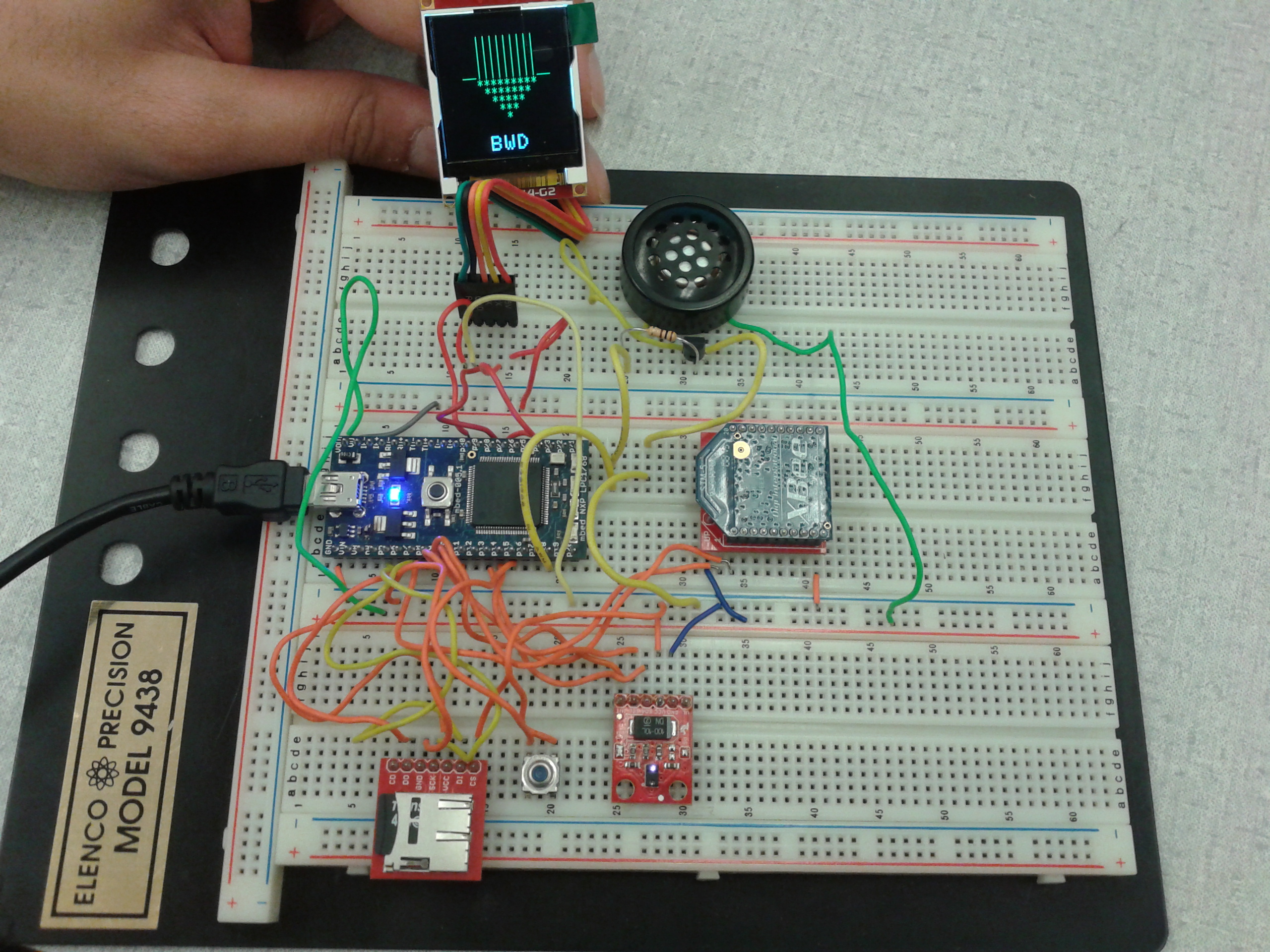

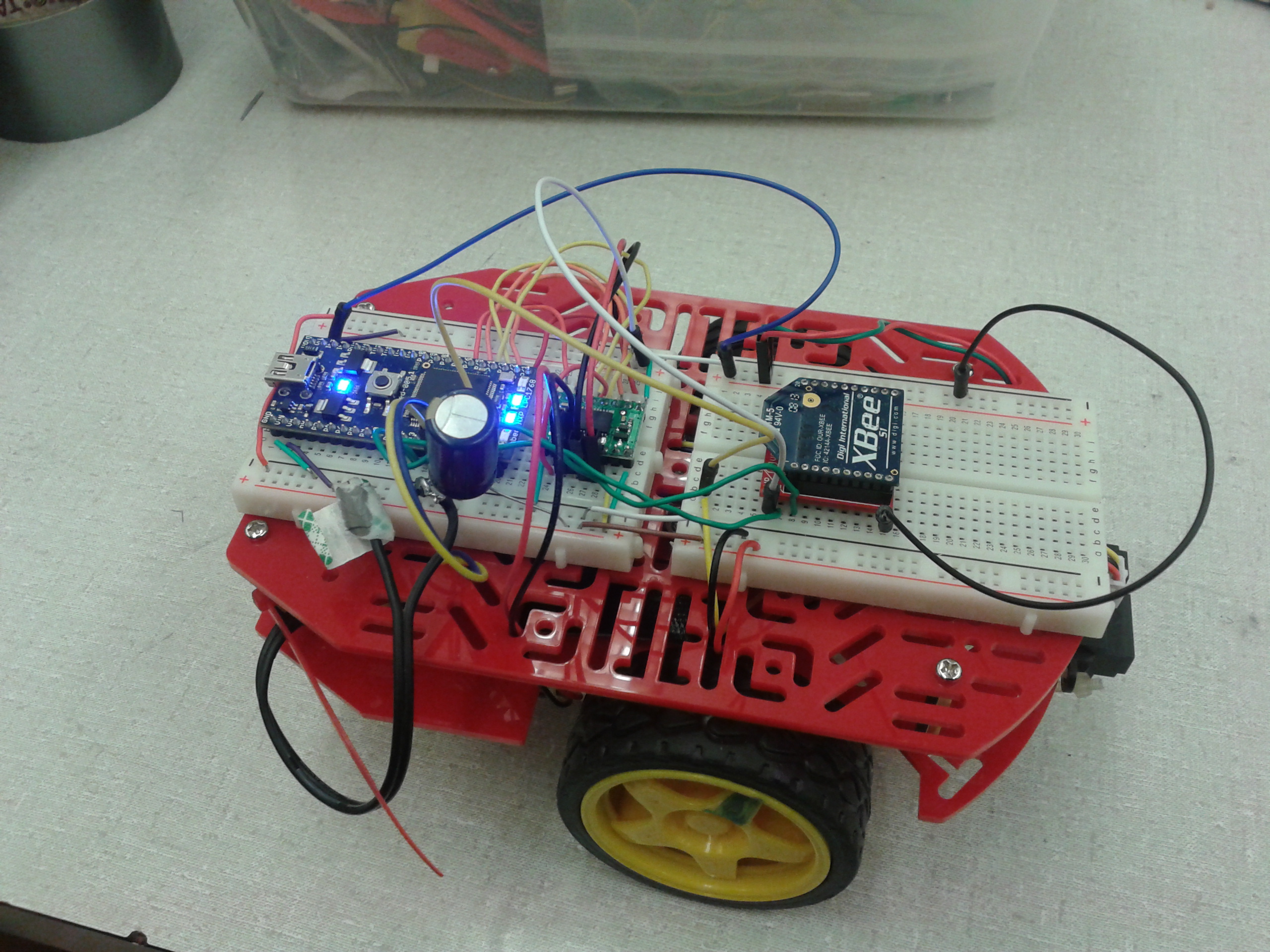

Our project wirelessly controls and steers a Magician Robot using a gesture sensor. Collision avoidance is implemented using IR sensors. A uLCD and a speaker give the user audio and visual feedback on a per-gesture basis.

We use two mbeds - one that is hooked up to the gesture sensor and one that drives the Robot - and two Series 1 XBees to establish a wireless link between the two.

Team Members

- Krishan Bhagat

- John Edwards

- Guru Das Srinagesh

- Neha Kadam

Components used

- Magician Robot

- APDS-9960 Digital Proximity, Ambient Light, RGB and Gesture Sensor

- 2 x mbed LPC1768

- 2 x XBee S1

- uLCD-144-G2 128 by 128 Smart Color LCD

- Optical wheel encoders

- Batteries

- IR sensor

- Speaker

- SparkFun MicroSD Breakout Board

Code

Controller

The controller receives gesture input from the user and sends the corresponding command to the robot through its XBee module.

The controller also provides audio and visual feedback: an LCD displays the command being sent to the robot, and a speaker announces it by voice.
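The controller's job can be pictured as a small lookup from a recognized gesture to a one-byte command. The sketch below is plain C++ rather than the project's mbed code. The `Gesture` enum, the command letters, and the function names `commandFor` and `labelFor` are all assumptions made for illustration and are not taken from Gesture_User_Interface.

```cpp
#include <cassert>
#include <string>

// Hypothetical gesture set (assumption): four swipe directions plus "none".
enum class Gesture { None, Up, Down, Left, Right };

// Hypothetical one-byte commands (assumption, not the project's protocol).
char commandFor(Gesture g) {
    switch (g) {
        case Gesture::Up:    return 'F';  // drive forward
        case Gesture::Down:  return 'B';  // drive backward
        case Gesture::Left:  return 'L';  // turn left
        case Gesture::Right: return 'R';  // turn right
        case Gesture::None:  return 'S';  // stop
    }
    assert(false && "unhandled gesture");  // only reached on an invalid enum value
    return 'S';
}

// Text the controller could show on its LCD for a given command byte.
std::string labelFor(char command) {
    switch (command) {
        case 'F': return "Forward";
        case 'B': return "Backward";
        case 'L': return "Left";
        case 'R': return "Right";
        case 'S': return "Stop";
        default:  return "Unknown";
    }
}
```

On the mbed, the byte returned by `commandFor` would be written to the serial port wired to the XBee (for example with `Serial::putc`), and the string from `labelFor` would be printed on the uLCD. A single-byte command keeps the wireless link simple, since the receiver can act on each byte without framing.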

Import program: Gesture_User_Interface

This program is a user interface to send gesture commands to the Magician Robot.

Magician Robot

The robot receives the command sent by the controller and executes it in real time.

Collision avoidance is implemented using IR sensors. When a sensor reading rises above a threshold (0.6 here), the robot sends a message back to the controller reporting that it detected a collision, then waits for a new command.
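The threshold rule described above can be sketched as a small state step. This is plain C++ for illustration, not the code in Magician_Gesture_Controlled_Robot. Only the 0.6 threshold comes from the text; the names `kCollisionThreshold`, `obstacleDetected`, `RobotState`, `StepResult`, and `step` are hypothetical, and the sketch assumes the reading is normalized to the range 0.0 to 1.0 and that the controller is notified once per detection.

```cpp
#include <cassert>

// Threshold from the project description; readings above it count as an obstacle.
constexpr float kCollisionThreshold = 0.6f;

// Assumes a normalized IR reading in [0.0, 1.0] (assumption about the sensor input).
bool obstacleDetected(float irReading) {
    assert(irReading >= 0.0f && irReading <= 1.0f);
    return irReading > kCollisionThreshold;
}

// Hypothetical robot states for this sketch.
enum class RobotState { Executing, WaitingForCommand };

struct StepResult {
    RobotState next;        // state after this step
    bool notifyController;  // true when a collision message should be sent back
};

// One control-loop step: stop and notify the controller only while executing.
StepResult step(RobotState state, float irReading) {
    if (state == RobotState::Executing && obstacleDetected(irReading)) {
        return {RobotState::WaitingForCommand, true};
    }
    return {state, false};
}
```

On the mbed, `AnalogIn::read()` returns a float between 0.0 and 1.0, so a value read that way could be passed to `step` directly. As the note on the robot program says for motor speeds and encoder values, the threshold is likely to need tuning for a different sensor or robot.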

Import program: Magician_Gesture_Controlled_Robot

Post-demo commit. Motor speeds and encoder threshold values reflect the non-linearity of our robot. Users should update motor speeds and encoder values to reflect their own robots.

Demo
