Sample program that can send the recognition data from HVC-P2 to Fujitsu IoT Platform using REST (HTTP)

Dependencies: AsciiFont GR-PEACH_video GraphicsFramework LCD_shield_config R_BSP USBHost_custom easy-connect-gr-peach mbed-http picojson

Information

This page contains both English and Japanese descriptions. The English description comes first, followed by the Japanese one. 本ページには英語版と日本語版の説明があります。まず英語版、続いて日本語版の説明となります。

Overview

This sample program shows how to send the recognition data gathered by the Omron HVC-P2 (Human Vision Components B5T-007001) to the IoT Platform operated by FUJITSU ( http://jp.fujitsu.com/solutions/cloud/k5/function/paas/iot-platform/ ).

Hardware Configuration

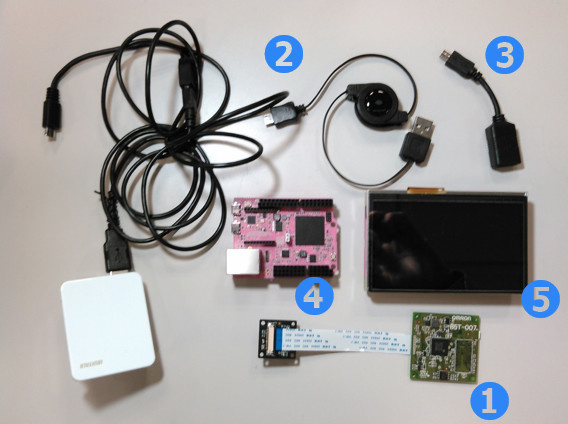

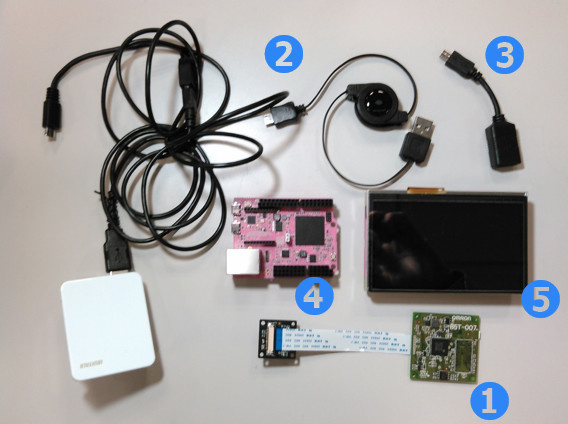

- GR-PEACH 1 set ( https://developer.mbed.org/platforms/Renesas-GR-PEACH/ )

- LCD Shield 1 set ( https://developer.mbed.org/teams/Renesas/Wiki/LCD-shield )

- HVC-P2 1 set ( Human Vision Components B5T-007001 ) ( https://plus-sensin.omron.com/product/B5T-007001/ )

- USBA - Micro USB Cable 2 sets

- USBA (Female) - Micro USB (Male) Adapter 1 set

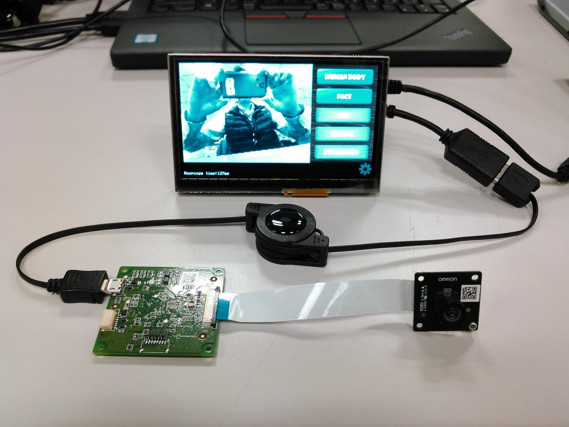

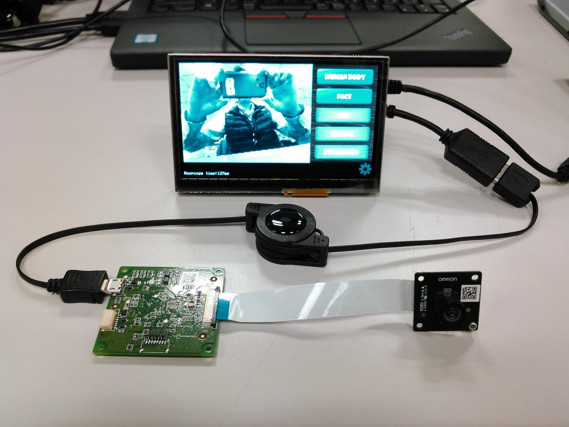

To run this sample program, please connect the hardware listed above as shown below:

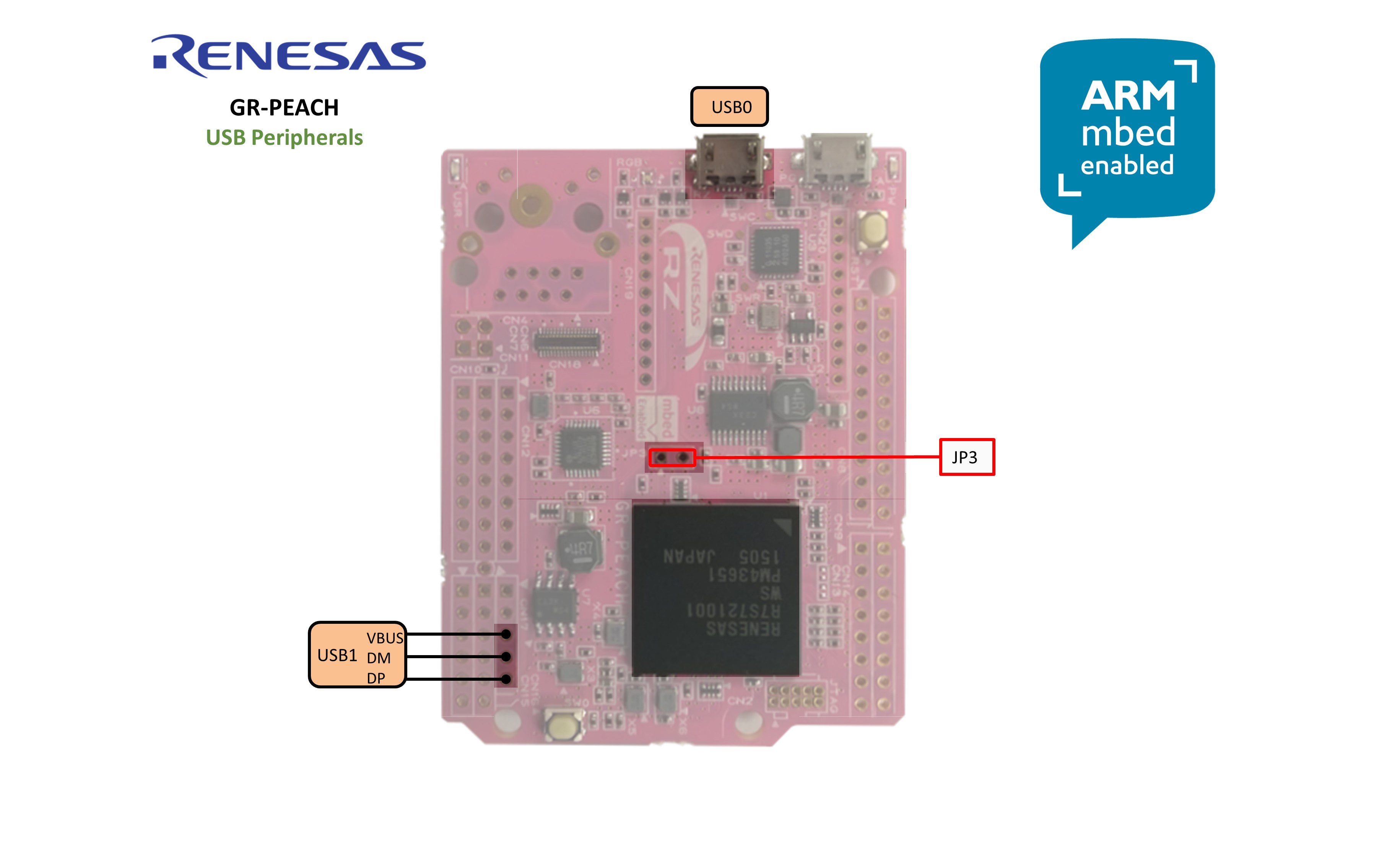

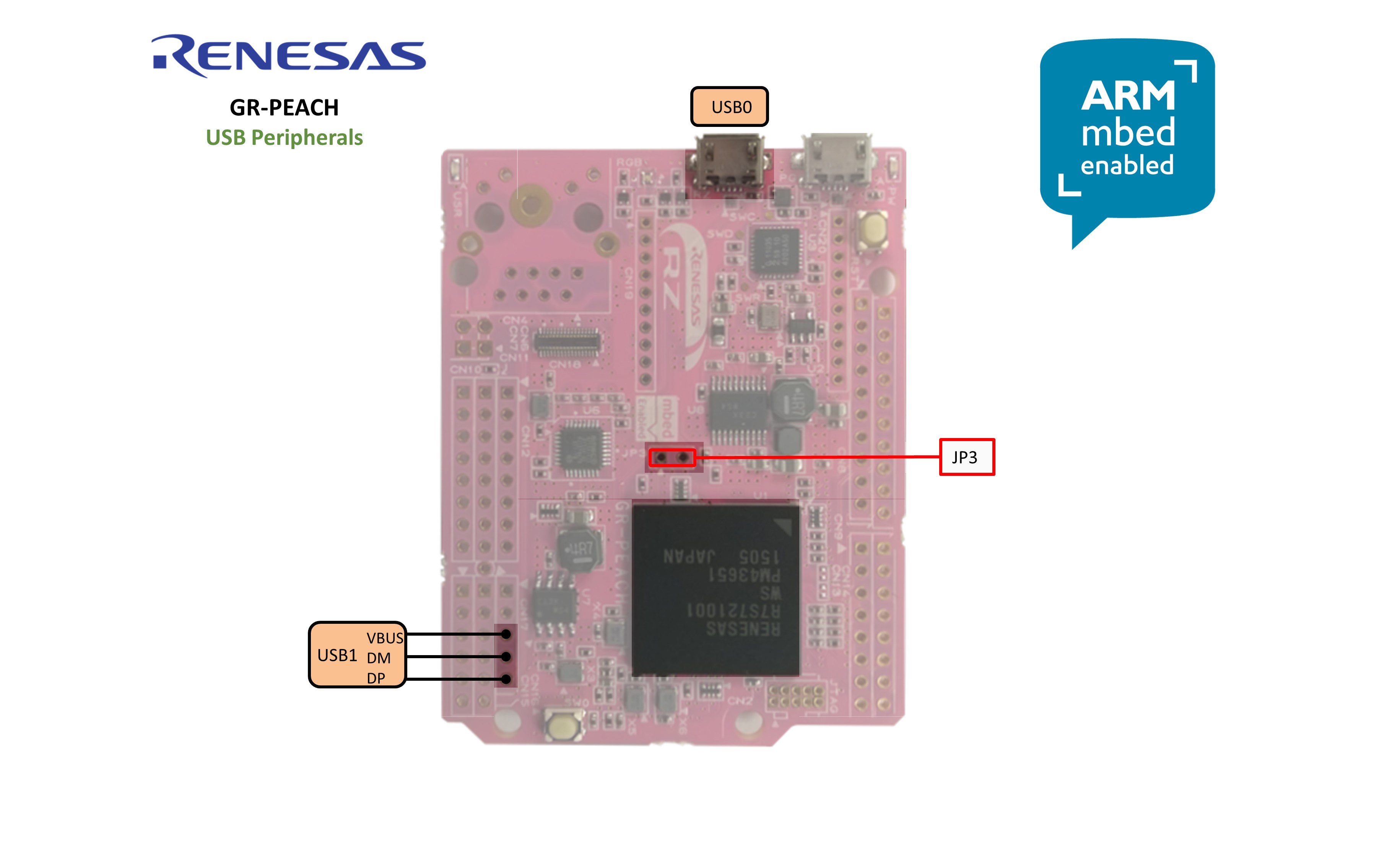

Also, please close JP3 of GR-PEACH as follows:

Application Preconfiguration

- Configure Ethernet settings. For details, please refer to the following link:

https://developer.mbed.org/teams/Renesas/code/GR-PEACH_IoT_Platform_HTTP_sample/wiki/Ethernet-settings

- On IoT Platform, set up the resource where the gathered data will be stored, together with its access code. For details on resources and access codes, please refer to the following links:

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/userguide_en.pdf

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/apireference_en.pdf

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/portalmanual_en.pdf

Build Procedure

- Import this sample program onto mbed Compiler

- In GR-PEACH_HVC-P2_IoTPlatform_http/IoT_Platform/iot_platform.cpp, please replace <Access CODE> with the access code you set up on IoT Platform. For details on how to set up the access code, please refer to Application Preconfiguration above. Then, please delete the line beginning with #error.

Access Code configuration

#define ACCESS_CODE <Access CODE>

#error "You need to replace <Access CODE for your resource> with yours"

- In GR-PEACH_HVC-P2_IoTPlatform_http/IoT_Platform/iot_platform.cpp, please replace <Base URI>, <Tenant ID> and <Path-to-Resource> below with your own values, and delete the lines beginning with #error. For details on <Base URI> and <Tenant ID>, please contact FUJITSU LIMITED. For details on <Path-to-Resource>, please refer to Application Preconfiguration above.

URI configuration

std::string put_uri_base("<Base URI>/v1/<Tenant ID>/<Path-to-Resource>.json");

#error "You need to replace <Base URI>, <Tenant ID> and <Path-to-Resource> with yours"

**snip**

std::string get_uri("<Base URI>/v1/<Tenant ID>/<Path-to-Resource>/_past.json");

#error "You need to replace <Base URI>, <Tenant ID> and <Path-to-Resource> with yours"

- Compile the program. If compilation succeeds, the binary is downloaded to your PC.

- Plug an Ethernet cable into GR-PEACH.

- Plug the micro-USB cable into the port next to the RESET button. If GR-PEACH is recognized successfully, it appears as a USB flash drive named mbed, as shown below:

- Copy the downloaded binary to the mbed drive.

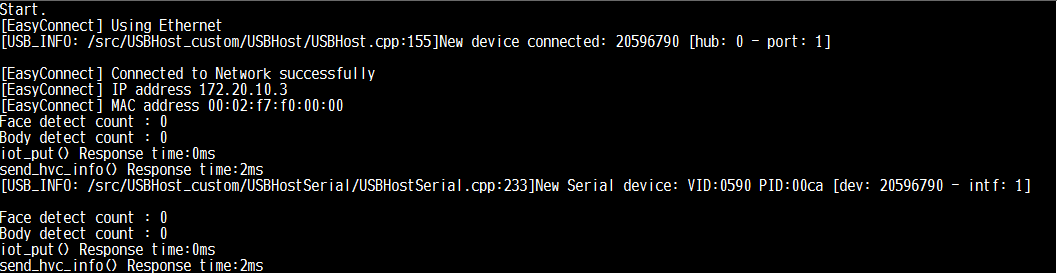

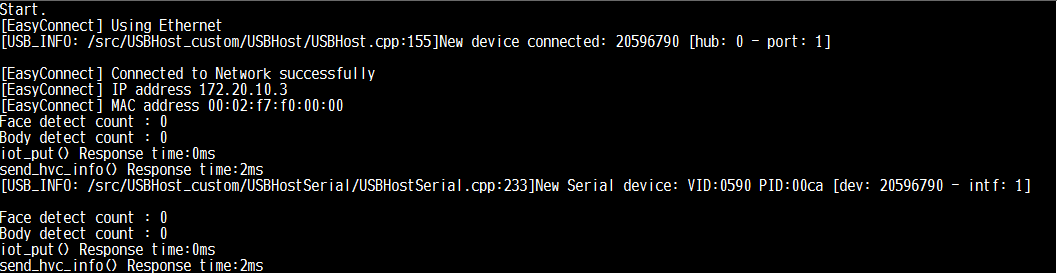

- Press the RESET button on GR-PEACH to run the program. If it runs successfully, you can see the following message on the terminal:

Format of Data to be sent to IoT Platform

In this program, the recognition data received from HVC-P2 is serialized in the following JSON format and sent to IoT Platform:

- Face detection data

{

"RecodeType": "HVC-P2(face)",

"id": "<GR-PEACH ID>-<Sensor ID>",

"FaceRectangle": {

"Height": xxxx,

"Left": xxxx,

"Top": xxxx,

"Width": xxxx

},

"Gender": "male" or "female",

"Scores": {

"Anger": zzz,

"Hapiness": zzz,

"Neutral": zzz,

"Sadness": zzz,

"Surprise": zzz

}

}

xxxx: Top-left coordinate, width and height of the rectangle circumscribing the detected face in LCD display coordinate system

zzz: the value indicating the expression estimated from the detected face
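As a rough illustration of the face record above, the following is a minimal sketch that builds the same JSON text with only the C++ standard library. The `FaceRecord` struct and the `face_record_to_json` function are hypothetical names introduced here; they are not taken from iot_platform.cpp, and the sample itself may build its payload differently (for example with picojson). The key spellings are copied from the listing above, and the id is assumed to contain no characters that need JSON escaping.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical container for one face result (illustrative field names,
// not the types declared in iot_platform.h).
struct FaceRecord {
    std::string id;                 // "<GR-PEACH ID>-<Sensor ID>"
    int left, top, width, height;   // rectangle in display coordinates
    bool male;                      // true -> "male", false -> "female"
    int anger, happiness, neutral, sadness, surprise;
};

// Serialize one record, using the key spellings from the format listing.
// Assumption: r.id needs no JSON escaping.
std::string face_record_to_json(const FaceRecord &r) {
    char buf[512];
    const int n = std::snprintf(buf, sizeof(buf),
        "{\"RecodeType\":\"HVC-P2(face)\","
        "\"id\":\"%s\","
        "\"FaceRectangle\":{\"Height\":%d,\"Left\":%d,\"Top\":%d,\"Width\":%d},"
        "\"Gender\":\"%s\","
        "\"Scores\":{\"Anger\":%d,\"Hapiness\":%d,\"Neutral\":%d,"
        "\"Sadness\":%d,\"Surprise\":%d}}",
        r.id.c_str(), r.height, r.left, r.top, r.width,
        r.male ? "male" : "female",
        r.anger, r.happiness, r.neutral, r.sadness, r.surprise);
    // The record must fit in the buffer; a longer id would need a larger one.
    assert(n > 0 && n < static_cast<int>(sizeof(buf)));
    return std::string(buf, static_cast<std::string::size_type>(n));
}
```

A caller would fill a `FaceRecord` from one detection result and pass the returned string as the body of the HTTP request. The body record can be produced the same way with the rectangle key renamed to "BodyRectangle" and the "Gender" and "Scores" members left out.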


- Body detection data

{

"RecodeType": "HVC-P2(body)",

"id": "<GR-PEACH ID>-<Sensor ID>",

"BodyRectangle": {

"Height": xxxx,

"Left": xxxx,

"Top": xxxx,

"Width": xxxx

}

}

xxxx: Top-left coordinate, width and height of the rectangle circumscribing the detected body in LCD display coordinate system
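The rectangle values above are expressed in the display coordinate system, while DrawSquare() in recognition_proc.cpp receives a centre position and a size, clips them to 1600 x 1200, and divides by 5 to reach the 320 x 240 display area. The following is a minimal sketch of that conversion. The `DisplayRect` type, the `to_display_rect` function and the constant names are hypothetical and introduced only for illustration; whether iot_platform.cpp applies the same conversion before sending is not shown on this page.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical result type: top-left corner, width and height in display pixels.
struct DisplayRect {
    int left;
    int top;
    int width;
    int height;
};

// Assumed constants, matching the literals 1600, 1200 and 5 used by DrawSquare().
const int kSensorWidth  = 1600;
const int kSensorHeight = 1200;
const int kScale        = 5;   // 1600 / 5 = 320, 1200 / 5 = 240

// Convert a detection given as centre (pos_x, pos_y) and edge length `size`
// into a clipped rectangle in display coordinates.
DisplayRect to_display_rect(int pos_x, int pos_y, int size) {
    assert(size >= 0);
    const int half = size / 2;
    // Clip the square to the sensor area before scaling.
    const int x0 = std::max(0, pos_x - half);
    const int y0 = std::max(0, pos_y - half);
    const int x1 = std::min(kSensorWidth, pos_x + half);
    const int y1 = std::min(kSensorHeight, pos_y + half);

    DisplayRect r;
    r.left   = x0 / kScale;
    r.top    = y0 / kScale;
    r.width  = (x1 - x0) / kScale;
    r.height = (y1 - y0) / kScale;
    return r;
}
```

For example, a detection centred at (800, 600) with size 400 maps to a rectangle at left 120, top 80 with width and height 80.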

概要

本プログラムは、オムロン社製HVC-P2 (Human Vision Components B5T-007001)で収集した各種認識データを、富士通社のIoT Platform ( http://jp.fujitsu.com/solutions/cloud/k5/function/paas/iot-platform/ ) に送信するサンプルプログラムです。

ハードウェア構成

- GR-PEACH 1式 ( https://developer.mbed.org/platforms/Renesas-GR-PEACH/ )

- LCD Shield 1式 ( https://developer.mbed.org/teams/Renesas/Wiki/LCD-shield )

- HVC-P2 1式 ( Human Vision Components B5T-007001 ) ( https://plus-sensin.omron.com/product/B5T-007001/ )

- USBA - Micro USBケーブル 2式

- USBA (メス) - Micro USB (オス)変換アダプタ 1式

本プログラムを動作させる場合、上記H/Wを下図のように接続してください。

また下図に示すGR-PEACHのJP3をショートしてください。

アプリケーションの事前設定

- Ethernetの設定を行います。詳細は下記リンクを参照ください。

https://developer.mbed.org/teams/Renesas/code/GR-PEACH_IoT_Platform_HTTP_sample/wiki/Ethernet-settings

- HVC-P2で収集したデータを格納するリソース、およびそのアクセスコードをIoT Platform上で設定します。詳細は下記リンクを参照ください。

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/userguide_en.pdf

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/apireference_en.pdf

https://iot-docs.jp-east-1.paas.cloud.global.fujitsu.com/en/manual/portalmanual_en.pdf

ビルド手順

- 本サンプルプログラムをmbed Compilerにインポートします

- 下記に示すGR-PEACH_HVC-P2_IoTPlatform_http/IoT_Platform/iot_platform.cpp中の<Access CODE>を、IoT Platform上で設定したアクセスコードで上書きしてください。アクセスコードの設定方法につきましては、上述の「アプリケーションの事前設定」を参照願います。また #errorで始まる行を削除してください。

Access Code configuration

#define ACCESS_CODE <Access CODE>

#error "You need to replace <Access CODE for your resource> with yours"

- 下記に示すGR-PEACH_HVC-P2_IoTPlatform_http/IoT_Platform/iot_platform.cpp中の<Base URI>と<Tenant ID>、および<Path-to-Resource>を適当な値に置換えるとともに、#errorで始まる行を削除してください。ここで、<Base URI>、<Tenant ID>の詳細につきましては富士通社へご確認願います。また<Path-to-Resource>につきましては、上述の「アプリケーションの事前設定」の項を参照ください。

URI configuration

std::string put_uri_base("<Base URI>/v1/<Tenant ID>/<Path-to-Resource>.json");

#error "You need to replace <Base URI>, <Tenant ID> and <Path-to-Resource> with yours"

(中略)

std::string get_uri("<Base URI>/v1/<Tenant ID>/<Path-to-Resource>/_past.json");

#error "You need to replace <Base URI>, <Tenant ID> and <Path-to-Resource> with yours"

- プログラムをコンパイルします。コンパイルが正常終了すると、バイナリがお使いのPCにダウンロードされます。

- GR-PEACHのRJ-45コネクタにEthernetケーブルを差し込んでください。

- USBA - Micro USBケーブルを、GR-PEACHのRESETボタンの隣に配置されたMicro USBポートに差し込んでください。GR-PEACHが正常に認識されると、下図に示すようにGR-PEACHがmbedという名称のUSBドライブとして認識されます。

- ダウンロードしたバイナリをmbedドライブにコピーします。

- RESETボタンを押下してプログラムを実行します。正常に実行された場合、下記に示すメッセージがターミナル上に表示されます。

送信データフォーマット

本プログラムでは、HVC-P2が収集した認識データを下記のJSONフォーマットにシリアライズし、IoT Platformへ送信します。

- Face detection data

{

"RecodeType": "HVC-P2(face)",

"id": "<GR-PEACH ID>-<Sensor ID>",

"FaceRectangle": {

"Height": xxxx,

"Left": xxxx,

"Top": xxxx,

"Width": xxxx

},

"Gender": "male" or "female",

"Scores": {

"Anger": zzz,

"Hapiness": zzz,

"Neutral": zzz,

"Sadness": zzz,

"Surprise": zzz

}

}

xxxx: LCD表示座標系における検出した顔に外接する矩形の左上座標・幅・高さ

zzz: 検出した顔から推定した各種感情を示す数値


- Body detection data

{

"RecodeType": "HVC-P2(body)",

"id": "<GR-PEACH ID>-<Sensor ID>",

"BodyRectangle": {

"Height": xxxx,

"Left": xxxx,

"Top": xxxx,

"Width": xxxx

}

}

xxxx: LCD表示座標系における検出した人体に外接する矩形の左上座標・幅・高さ

recognition_proc/recognition_proc.cpp

- Committer: Osamu Nakamura

- Date: 2017-09-07

- Revision: 0:813a237f1c50

File content as of revision 0:813a237f1c50:

#include "mbed.h"

#include "DisplayBace.h"

#include "rtos.h"

#include "AsciiFont.h"

#include "USBHostSerial.h"

#include "LCD_shield_config_4_3inch.h"

#include "recognition_proc.h"

#include "iot_platform.h"

#define UART_SETTING_TIMEOUT 1000 /* HVC setting command signal timeout period */

#define UART_REGIST_EXECUTE_TIMEOUT 7000 /* HVC registration command signal timeout period */

#define UART_EXECUTE_TIMEOUT 10000 /* HVC execute command signal timeout period */

#define SENSOR_ROLL_ANGLE_DEFAULT 0 /* Camera angle setting */

#define USER_ID_NUM_MAX 10

#define ERROR_02 "Error: Number of detected faces is 2 or more"

#define DISP_PIXEL_WIDTH (320)

#define DISP_PIXEL_HEIGHT (240)

/*! Frame buffer stride: Frame buffer stride should be set to a multiple of 32 or 128

in accordance with the frame buffer burst transfer mode. */

/* FRAME BUFFER Parameter GRAPHICS_LAYER_0 */

#define FRAME_BUFFER_BYTE_PER_PIXEL (2u)

#define FRAME_BUFFER_STRIDE (((DISP_PIXEL_WIDTH * FRAME_BUFFER_BYTE_PER_PIXEL) + 31u) & ~31u)

/* RESULT BUFFER Parameter GRAPHICS_LAYER_1 */

#define RESULT_BUFFER_BYTE_PER_PIXEL (2u)

#define RESULT_BUFFER_STRIDE (((DISP_PIXEL_WIDTH * RESULT_BUFFER_BYTE_PER_PIXEL) + 31u) & ~31u)

static bool registrationr_req = false;

static bool setting_req = false;

static recognition_setting_t setting = {

0x1FF,

{ BODY_THRESHOLD_DEFAULT, HAND_THRESHOLD_DEFAULT, FACE_THRESHOLD_DEFAULT, REC_THRESHOLD_DEFAULT},

{ BODY_SIZE_RANGE_MIN_DEFAULT, BODY_SIZE_RANGE_MAX_DEFAULT, HAND_SIZE_RANGE_MIN_DEFAULT,

HAND_SIZE_RANGE_MAX_DEFAULT, FACE_SIZE_RANGE_MIN_DEFAULT, FACE_SIZE_RANGE_MAX_DEFAULT},

FACE_POSE_DEFAULT,

FACE_ANGLE_DEFAULT

};

static USBHostSerial serial;

static InterruptIn button(USER_BUTTON0);

#if defined(__ICCARM__)

/* 32 bytes aligned */

#pragma data_alignment=32

static uint8_t user_frame_buffer0[FRAME_BUFFER_STRIDE * DISP_PIXEL_HEIGHT]@ ".mirrorram";

#pragma data_alignment=32

static uint8_t user_frame_buffer_result[RESULT_BUFFER_STRIDE * LCD_PIXEL_HEIGHT]@ ".mirrorram"; /* LCD_PIXEL_HEIGHT rows, matching the non-IAR branch and the GRAPHICS_LAYER_1 settings */

#else

/* 32 bytes aligned */

static uint8_t user_frame_buffer0[FRAME_BUFFER_STRIDE * DISP_PIXEL_HEIGHT]__attribute((section("NC_BSS"),aligned(32)));

static uint8_t user_frame_buffer_result[RESULT_BUFFER_STRIDE * LCD_PIXEL_HEIGHT]__attribute((section("NC_BSS"),aligned(32)));

#endif

static AsciiFont ascii_font(user_frame_buffer_result, DISP_PIXEL_WIDTH, LCD_PIXEL_HEIGHT,

RESULT_BUFFER_STRIDE, RESULT_BUFFER_BYTE_PER_PIXEL, 0x00000090);

static INT32 imageNo_setting = HVC_EXECUTE_IMAGE_QVGA_HALF;

static int str_draw_x = 0;

static int str_draw_y = 0;

/* IoT ready semaphore */

Semaphore iot_ready_semaphore(1);

int semaphore_wait_ret;

/****** Image Recognition ******/

extern "C" int UART_SendData(int inDataSize, UINT8 *inData) {

return serial.writeBuf((char *)inData, inDataSize);

}

extern "C" int UART_ReceiveData(int inTimeOutTime, int inDataSize, UINT8 *outResult) {

return serial.readBuf((char *)outResult, inDataSize, inTimeOutTime);

}

void SetRegistrationrReq(void) {

registrationr_req = true;

}

void SetSettingReq(void) {

setting_req = true;

}

recognition_setting_t * GetRecognitionSettingPointer(void) {

return &setting;

}

static void EraseImage(void) {

uint32_t i = 0;

while (i < sizeof(user_frame_buffer0)) {

user_frame_buffer0[i++] = 0x10;

user_frame_buffer0[i++] = 0x80;

}

}

static void DrawImage(int x, int y, int nWidth, int nHeight, UINT8 *unImageBuffer, int magnification) {

int idx_base;

int idx_w = 0;

int wk_tmp = 0;

int i;

int j;

int k;

int idx_r = 0;

if (magnification <= 0) {

return;

}

idx_base = (x + (DISP_PIXEL_WIDTH * y)) * RESULT_BUFFER_BYTE_PER_PIXEL;

for (i = 0; i < nHeight; i++) {

idx_w = idx_base + (DISP_PIXEL_WIDTH * RESULT_BUFFER_BYTE_PER_PIXEL * i) * magnification;

wk_tmp = idx_w;

for (j = 0; j < nWidth; j++) {

for (k = 0; k < magnification; k++) {

user_frame_buffer0[idx_w] = unImageBuffer[idx_r];

idx_w += 2;

}

idx_r++;

}

for (k = 1; k < magnification; k++) {

memcpy(&user_frame_buffer0[wk_tmp + (DISP_PIXEL_WIDTH * RESULT_BUFFER_BYTE_PER_PIXEL * k)], &user_frame_buffer0[wk_tmp], idx_w - wk_tmp);

}

}

}

static void DrawSquare(int x, int y, int size, uint32_t const colour) {

int wk_x;

int wk_y;

int wk_w = 0;

int wk_h = 0;

int idx_base;

int wk_idx;

int i;

int j;

uint8_t coller_pix[RESULT_BUFFER_BYTE_PER_PIXEL]; /* ARGB4444 */

bool l_draw = true;

bool r_draw = true;

bool t_draw = true;

bool b_draw = true;

if ((x - (size / 2)) < 0) {

l_draw = false;

wk_w += x;

wk_x = 0;

} else {

wk_w += (size / 2);

wk_x = x - (size / 2);

}

if ((x + (size / 2)) >= 1600) {

r_draw = false;

wk_w += (1600 - x);

} else {

wk_w += (size / 2);

}

if ((y - (size / 2)) < 0) {

t_draw = false;

wk_h += y;

wk_y = 0;

} else {

wk_h += (size / 2);

wk_y = y - (size / 2);

}

if ((y + (size / 2)) >= 1200) {

b_draw = false;

wk_h += (1200 - y);

} else {

wk_h += (size / 2);

}

/* Scale from the 1600 x 1200 detection coordinates to the 320 x 240 display area */

wk_x = wk_x / 5;

wk_y = wk_y / 5;

wk_w = wk_w / 5;

wk_h = wk_h / 5;

if ((colour == 0x0000f0f0) || (colour == 0x0000fff4)) {

str_draw_x = wk_x;

str_draw_y = wk_y + wk_h + 1;

}

idx_base = (wk_x + (DISP_PIXEL_WIDTH * wk_y)) * RESULT_BUFFER_BYTE_PER_PIXEL;

/* Select color */

coller_pix[0] = (colour >> 8) & 0xff; /* 4:Green 4:Blue */

coller_pix[1] = colour & 0xff; /* 4:Alpha 4:Red */

/* top */

if (t_draw) {

wk_idx = idx_base;

for (j = 0; j < wk_w; j++) {

user_frame_buffer_result[wk_idx++] = coller_pix[0];

user_frame_buffer_result[wk_idx++] = coller_pix[1];

}

}

/* middle */

for (i = 1; i < (wk_h - 1); i++) {

wk_idx = idx_base + (DISP_PIXEL_WIDTH * RESULT_BUFFER_BYTE_PER_PIXEL * i);

if (l_draw) {

user_frame_buffer_result[wk_idx + 0] = coller_pix[0];

user_frame_buffer_result[wk_idx + 1] = coller_pix[1];

}

wk_idx += (wk_w - 1) * 2;

if (r_draw) {

user_frame_buffer_result[wk_idx + 0] = coller_pix[0];

user_frame_buffer_result[wk_idx + 1] = coller_pix[1];

}

}

/* bottom */

if (b_draw) {

wk_idx = idx_base + (DISP_PIXEL_WIDTH * RESULT_BUFFER_BYTE_PER_PIXEL * (wk_h - 1));

for (j = 0; j < wk_w; j++) {

user_frame_buffer_result[wk_idx++] = coller_pix[0];

user_frame_buffer_result[wk_idx++] = coller_pix[1];

}

}

}

static void DrawString(const char * str, uint32_t const colour) {

ascii_font.Erase(0x00000090, str_draw_x, str_draw_y,

(AsciiFont::CHAR_PIX_WIDTH * strlen(str) + 2),

(AsciiFont::CHAR_PIX_HEIGHT + 2));

ascii_font.DrawStr(str, str_draw_x + 1, str_draw_y + 1, colour, 1);

str_draw_y += AsciiFont::CHAR_PIX_HEIGHT + 1;

}

static void button_fall(void) {

if (imageNo_setting == HVC_EXECUTE_IMAGE_NONE) {

imageNo_setting = HVC_EXECUTE_IMAGE_QVGA_HALF;

} else if (imageNo_setting == HVC_EXECUTE_IMAGE_QVGA_HALF) {

imageNo_setting = HVC_EXECUTE_IMAGE_QVGA;

} else {

imageNo_setting = HVC_EXECUTE_IMAGE_NONE;

}

}

void init_recognition_layers(DisplayBase * p_display) {

DisplayBase::rect_t rect;

/* The layer by which the image is drawn */

rect.vs = 0;

rect.vw = DISP_PIXEL_HEIGHT;

rect.hs = 0;

rect.hw = DISP_PIXEL_WIDTH;

p_display->Graphics_Read_Setting(

DisplayBase::GRAPHICS_LAYER_0,

(void *)user_frame_buffer0,

FRAME_BUFFER_STRIDE,

DisplayBase::GRAPHICS_FORMAT_YCBCR422,

DisplayBase::WR_RD_WRSWA_32_16BIT,

&rect

);

p_display->Graphics_Start(DisplayBase::GRAPHICS_LAYER_0);

/* The layer by which the image recognition is drawn */

rect.vs = 0;

rect.vw = LCD_PIXEL_HEIGHT;

rect.hs = 0;

rect.hw = DISP_PIXEL_WIDTH;

p_display->Graphics_Read_Setting(

DisplayBase::GRAPHICS_LAYER_1,

(void *)user_frame_buffer_result,

RESULT_BUFFER_STRIDE,

DisplayBase::GRAPHICS_FORMAT_ARGB4444,

DisplayBase::WR_RD_WRSWA_32_16BIT,

&rect

);

p_display->Graphics_Start(DisplayBase::GRAPHICS_LAYER_1);

}

void recognition_task(DisplayBase * p_display) {

INT32 ret = 0;

UINT8 status;

HVC_VERSION version;

HVC_RESULT *pHVCResult = NULL;

HVC_IMAGE *pImage = NULL;

INT32 execFlag;

INT32 imageNo;

INT32 userID;

INT32 next_userID;

INT32 dataID;

const char *pExStr[] = {"?", "Neutral", "Happiness", "Surprise", "Anger", "Sadness"};

uint32_t i;

char Str_disp[32];

Timer resp_time;

/* Register the button */

button.fall(&button_fall);

/* Initializing Recognition layers */

EraseImage();

memset(user_frame_buffer_result, 0, sizeof(user_frame_buffer_result));

init_recognition_layers(p_display);

/* Result Structure Allocation */

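pHVCResult = (HVC_RESULT *)calloc(1, sizeof(HVC_RESULT)); /* zero-filled so that fdResult.num reads as 0 if registration runs before the first HVC_ExecuteEx() */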

if (pHVCResult == NULL) {

printf("Memory Allocation Error : %08x\n", (unsigned int)sizeof(HVC_RESULT));

mbed_die();

}

/* Image Structure allocation */

pImage = (HVC_IMAGE *)malloc(sizeof(HVC_IMAGE));

if (pImage == NULL) {

printf("Memory Allocation Error : %08x\n", (unsigned int)sizeof(HVC_IMAGE));

mbed_die();

}

while (1) {

/* try to connect a serial device */

while (!serial.connect()) {

Thread::wait(500);

}

serial.baud(921600);

setting_req = true;

do {

/* Initializing variables */

next_userID = 0;

dataID = 0;

/* Get Model and Version */

ret = HVC_GetVersion(UART_SETTING_TIMEOUT, &version, &status);

if ((ret != 0) || (status != 0)) {

break;

}

while (1) {

if (!serial.connected()) {

break;

}

/* Execute Setting */

if (setting_req) {

setting_req = false;

/* Set Camera Angle */

ret = HVC_SetCameraAngle(UART_SETTING_TIMEOUT, SENSOR_ROLL_ANGLE_DEFAULT, &status);

if ((ret != 0) || (status != 0)) {

break;

}

/* Set Threshold Values */

ret = HVC_SetThreshold(UART_SETTING_TIMEOUT, &setting.threshold, &status);

if ((ret != 0) || (status != 0)) {

break;

}

ret = HVC_GetThreshold(UART_SETTING_TIMEOUT, &setting.threshold, &status);

if ((ret != 0) || (status != 0)) {

break;

}

/* Set Detection Size */

ret = HVC_SetSizeRange(UART_SETTING_TIMEOUT, &setting.sizeRange, &status);

if ((ret != 0) || (status != 0)) {

break;

}

ret = HVC_GetSizeRange(UART_SETTING_TIMEOUT, &setting.sizeRange, &status);

if ((ret != 0) || (status != 0)) {

break;

}

/* Set Face Angle */

ret = HVC_SetFaceDetectionAngle(UART_SETTING_TIMEOUT, setting.pose, setting.angle, &status);

if ((ret != 0) || (status != 0)) {

break;

}

ret = HVC_GetFaceDetectionAngle(UART_SETTING_TIMEOUT, &setting.pose, &setting.angle, &status);

if ((ret != 0) || (status != 0)) {

break;

}

}

/* Execute Registration */

if (registrationr_req) {

int wk_width;

if ((pHVCResult->fdResult.num == 1) && (pHVCResult->fdResult.fcResult[0].recognitionResult.uid >= 0)) {

userID = pHVCResult->fdResult.fcResult[0].recognitionResult.uid;

} else {

userID = next_userID;

}

ret = HVC_Registration(UART_REGIST_EXECUTE_TIMEOUT, userID, dataID, pImage, &status);

if ((ret == 0) && (status == 0)) {

if (userID == next_userID) {

next_userID++;

if (next_userID >= USER_ID_NUM_MAX) {

next_userID = 0;

}

}

memset(user_frame_buffer_result, 0, sizeof(user_frame_buffer_result));

DrawImage(128, 88, pImage->width, pImage->height, pImage->image, 1);

memset(Str_disp, 0, sizeof(Str_disp));

sprintf(Str_disp, "USER%03d", userID + 1);

wk_width = (AsciiFont::CHAR_PIX_WIDTH * strlen(Str_disp)) + 2;

ascii_font.Erase(0x00000090, (DISP_PIXEL_WIDTH - wk_width) / 2, 153, wk_width, (AsciiFont::CHAR_PIX_HEIGHT + 2));

ascii_font.DrawStr(Str_disp, (DISP_PIXEL_WIDTH - wk_width) / 2 + 1, 154, 0x0000ffff, 1);

Thread::wait(1200);

} else {

if (status == 0x02) {

wk_width = (AsciiFont::CHAR_PIX_WIDTH * (sizeof(ERROR_02) - 1)) + 4;

ascii_font.Erase(0x00000090, (DISP_PIXEL_WIDTH - wk_width) / 2, 120, wk_width, (AsciiFont::CHAR_PIX_HEIGHT + 3));

ascii_font.DrawStr(ERROR_02, (DISP_PIXEL_WIDTH - wk_width) / 2 + 2, 121, 0x0000ffff, 1);

Thread::wait(1500);

}

}

registrationr_req = false;

}

/* Execute Detection */

semaphore_wait_ret = iot_ready_semaphore.wait();

if(semaphore_wait_ret == -1)

{

printf("<recog_proc> semaphore error.\n");

}else{

execFlag = setting.execFlag;

if ((execFlag & HVC_ACTIV_FACE_DETECTION) == 0) {

execFlag &= ~(HVC_ACTIV_AGE_ESTIMATION | HVC_ACTIV_GENDER_ESTIMATION | HVC_ACTIV_EXPRESSION_ESTIMATION);

}

imageNo = imageNo_setting;

resp_time.reset();

resp_time.start();

ret = HVC_ExecuteEx(UART_EXECUTE_TIMEOUT, execFlag, imageNo, pHVCResult, &status);

resp_time.stop();

if ((ret == 0) && (status == 0)) {

if (imageNo == HVC_EXECUTE_IMAGE_QVGA_HALF) {

DrawImage(0, 0, pHVCResult->image.width, pHVCResult->image.height, pHVCResult->image.image, 2);

} else if (imageNo == HVC_EXECUTE_IMAGE_QVGA) {

DrawImage(0, 0, pHVCResult->image.width, pHVCResult->image.height, pHVCResult->image.image, 1);

} else {

EraseImage();

}

memset(user_frame_buffer_result, 0, sizeof(user_frame_buffer_result));

if (pHVCResult->executedFunc & HVC_ACTIV_BODY_DETECTION) {

/* Body Detection result */

result_hvcp2_bd_cnt = pHVCResult->bdResult.num;

for (i = 0; i < pHVCResult->bdResult.num; i++) {

DrawSquare(pHVCResult->bdResult.bdResult[i].posX,

pHVCResult->bdResult.bdResult[i].posY,

pHVCResult->bdResult.bdResult[i].size,

0x000000ff);

result_hvcp2_bd[i].body_rectangle.MinX = pHVCResult->bdResult.bdResult[i].posX;

result_hvcp2_bd[i].body_rectangle.MinY = pHVCResult->bdResult.bdResult[i].posY;

result_hvcp2_bd[i].body_rectangle.Width = pHVCResult->bdResult.bdResult[i].size;

result_hvcp2_bd[i].body_rectangle.Height = pHVCResult->bdResult.bdResult[i].size;

}

}

/* Face Detection result */

if (pHVCResult->executedFunc &

(HVC_ACTIV_FACE_DETECTION | HVC_ACTIV_FACE_DIRECTION |

HVC_ACTIV_AGE_ESTIMATION | HVC_ACTIV_GENDER_ESTIMATION |

HVC_ACTIV_GAZE_ESTIMATION | HVC_ACTIV_BLINK_ESTIMATION |

HVC_ACTIV_EXPRESSION_ESTIMATION | HVC_ACTIV_FACE_RECOGNITION)){

/* Face Detection result */

result_hvcp2_fd_cnt = pHVCResult->fdResult.num;

for (i = 0; i < pHVCResult->fdResult.num; i++) {

if (pHVCResult->executedFunc & HVC_ACTIV_FACE_DETECTION) {

uint32_t detection_colour = 0x0000f0f0; /* green */

if (pHVCResult->executedFunc & HVC_ACTIV_FACE_RECOGNITION) {

if (pHVCResult->fdResult.fcResult[i].recognitionResult.uid >= 0) {

detection_colour = 0x0000fff4; /* blue */

}

}

/* Detection */

DrawSquare(pHVCResult->fdResult.fcResult[i].dtResult.posX,

pHVCResult->fdResult.fcResult[i].dtResult.posY,

pHVCResult->fdResult.fcResult[i].dtResult.size,

detection_colour);

result_hvcp2_fd[i].face_rectangle.MinX = pHVCResult->fdResult.fcResult[i].dtResult.posX;

result_hvcp2_fd[i].face_rectangle.MinY = pHVCResult->fdResult.fcResult[i].dtResult.posY;

result_hvcp2_fd[i].face_rectangle.Width = pHVCResult->fdResult.fcResult[i].dtResult.size;

result_hvcp2_fd[i].face_rectangle.Height = pHVCResult->fdResult.fcResult[i].dtResult.size;

}

if (pHVCResult->executedFunc & HVC_ACTIV_FACE_RECOGNITION) {

/* Recognition */

if (-128 == pHVCResult->fdResult.fcResult[i].recognitionResult.uid) {

DrawString("Not possible", 0x0000f0ff);

} else if (pHVCResult->fdResult.fcResult[i].recognitionResult.uid < 0) {

DrawString("Not registered", 0x0000f0ff);

} else {

memset(Str_disp, 0, sizeof(Str_disp));

sprintf(Str_disp, "USER%03d", pHVCResult->fdResult.fcResult[i].recognitionResult.uid + 1);

DrawString(Str_disp, 0x0000f0ff);

}

}

if (pHVCResult->executedFunc & HVC_ACTIV_AGE_ESTIMATION) {

/* Age */

if (-128 != pHVCResult->fdResult.fcResult[i].ageResult.age) {

memset(Str_disp, 0, sizeof(Str_disp));

sprintf(Str_disp, "Age:%d", pHVCResult->fdResult.fcResult[i].ageResult.age);

DrawString(Str_disp, 0x0000f0ff);

}

}

if (pHVCResult->executedFunc & HVC_ACTIV_GENDER_ESTIMATION) {

/* Gender */

if (-128 != pHVCResult->fdResult.fcResult[i].genderResult.gender) {

if (1 == pHVCResult->fdResult.fcResult[i].genderResult.gender) {

DrawString("Male", 0x0000fff4);

} else {

DrawString("Female", 0x00006dff);

}

}

}

if (pHVCResult->executedFunc & HVC_ACTIV_EXPRESSION_ESTIMATION) {

/* Expression */

if (-128 != pHVCResult->fdResult.fcResult[i].expressionResult.score[0]) {

uint32_t colour;

/* score[] is assumed to follow the same order as pExStr[1..5]: neutral, happiness, surprise, anger, sadness */

result_hvcp2_fd[i].scores.score_neutral = pHVCResult->fdResult.fcResult[i].expressionResult.score[0];

result_hvcp2_fd[i].scores.score_happiness = pHVCResult->fdResult.fcResult[i].expressionResult.score[1];

result_hvcp2_fd[i].scores.score_surprise = pHVCResult->fdResult.fcResult[i].expressionResult.score[2];

result_hvcp2_fd[i].scores.score_anger = pHVCResult->fdResult.fcResult[i].expressionResult.score[3];

result_hvcp2_fd[i].scores.score_sadness = pHVCResult->fdResult.fcResult[i].expressionResult.score[4];

result_hvcp2_fd[i].age.age = pHVCResult->fdResult.fcResult[i].ageResult.age;

result_hvcp2_fd[i].gender.gender = pHVCResult->fdResult.fcResult[i].genderResult.gender;

if (pHVCResult->fdResult.fcResult[i].expressionResult.topExpression > EX_SADNESS) {

pHVCResult->fdResult.fcResult[i].expressionResult.topExpression = 0;

}

switch (pHVCResult->fdResult.fcResult[i].expressionResult.topExpression) {

case 1: colour = 0x0000ffff; break; /* white */

case 2: colour = 0x0000f0ff; break; /* yellow */

case 3: colour = 0x000060ff; break; /* orange */

case 4: colour = 0x00000fff; break; /* purple */

case 5: colour = 0x0000fff4; break; /* blue */

default: colour = 0x0000ffff; break; /* white */

}

DrawString(pExStr[pHVCResult->fdResult.fcResult[i].expressionResult.topExpression], colour);

}

}

}

}

}

iot_ready_semaphore.release();

}

/* Response time */

memset(Str_disp, 0, sizeof(Str_disp));

sprintf(Str_disp, "Response time:%dms", resp_time.read_ms());

ascii_font.Erase(0, 0, 0, 0, 0);

ascii_font.DrawStr(Str_disp, 0, LCD_PIXEL_HEIGHT - AsciiFont::CHAR_PIX_HEIGHT, 0x0000ffff, 1);

}

} while(0);

EraseImage();

memset(user_frame_buffer_result, 0, sizeof(user_frame_buffer_result));

}

}
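The FRAME_BUFFER_STRIDE and RESULT_BUFFER_STRIDE macros near the top of this file round the byte count of one display line up to a multiple of 32. The following is a minimal sketch of that arithmetic, written as C++11 constexpr functions so the values can be checked at compile time. The names `align_up_32` and `stride_bytes` are hypothetical and do not appear in the sample.

```cpp
#include <cassert>
#include <cstdint>

// Round a byte count up to the next multiple of 32.
// Adding 31 and clearing the low five bits is the same expression
// the stride macros use: (n + 31u) & ~31u.
constexpr std::uint32_t align_up_32(std::uint32_t bytes) {
    return (bytes + 31u) & ~31u;
}

// Stride in bytes for one line of `width_px` pixels.
constexpr std::uint32_t stride_bytes(std::uint32_t width_px,
                                     std::uint32_t bytes_per_pixel) {
    return align_up_32(width_px * bytes_per_pixel);
}

// 320 px at 2 bytes per pixel is 640 bytes, already a multiple of 32.
static_assert(stride_bytes(320u, 2u) == 640u, "QVGA line needs no padding");
// 330 px at 2 bytes per pixel is 660 bytes, padded up to 672.
static_assert(stride_bytes(330u, 2u) == 672u, "660 bytes rounds up to 672");
```

With the values in this file (DISP_PIXEL_WIDTH of 320 and 2 bytes per pixel), both strides come out to 640 bytes, so no padding is added. The rounding only changes the result when the line width is not already a multiple of 32 bytes.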